Tarantool - Documentation¶

Getting started¶

In this chapter, we show how to work with Tarantool as a DBMS – and how to connect to a Tarantool database from other programming languages.

Creating your first Tarantool database¶

First thing, let’s install Tarantool, start it, and create a simple database.

You can install Tarantool and work with it locally or in Docker.

Using a Docker image¶

For trial and test purposes, we recommend using official Tarantool images for Docker. An official image contains a particular Tarantool version and all popular external modules for Tarantool. Everything is already installed and configured in Linux. These images are the easiest way to install and use Tarantool.

Note

If you’re new to Docker, we recommend going over this tutorial before proceeding with this chapter.

Launching a container¶

If you don’t have Docker installed, please follow the official installation guide for your OS.

To start a fully functional Tarantool instance, run a container with minimal options:

$ docker run \

--name mytarantool \

-d -p 3301:3301 \

-v /data/dir/on/host:/var/lib/tarantool \

tarantool/tarantool:1

This command runs a new container named mytarantool.

Docker starts it from an official image named tarantool/tarantool:1,

with Tarantool version 1.10 and all external modules already installed.

Tarantool will be accepting incoming connections on localhost:3301.

You may start using it as a key-value storage right away.

Tarantool persists data inside the container.

To make your test data available after you stop the container,

this command also mounts the host’s directory /data/dir/on/host

(you need to specify here an absolute path to an existing local directory)

in the container’s directory /var/lib/tarantool

(by convention, Tarantool in a container uses this directory to persist data).

So, all changes made in the mounted directory on the container’s side

are applied to the host’s disk.

Tarantool’s database module in the container is already configured and started. You needn’t do it manually, unless you use Tarantool as an application server and run it with an application.

Note

If your container terminates soon after start, follow this page for a possible solution.

Attaching to Tarantool¶

To attach to Tarantool that runs inside the container, say:

$ docker exec -i -t mytarantool console

This command:

- Instructs Tarantool to open an interactive console port for incoming connections.

- Attaches to the Tarantool server inside the container under

adminuser via a standard Unix socket.

Tarantool displays a prompt:

tarantool.sock>

Now you can enter requests on the command line.

Note

On production machines, Tarantool’s interactive mode is for system administration only. But we use it for most examples in this manual, because the interactive mode is convenient for learning.

Creating a database¶

While you’re attached to the console, let’s create a simple test database.

First, create the first space (named tester):

tarantool.sock> s = box.schema.space.create('tester')

Format the created space by specifying field names and types:

tarantool.sock> s:format({

> {name = 'id', type = 'unsigned'},

> {name = 'band_name', type = 'string'},

> {name = 'year', type = 'unsigned'}

> })

Create the first index (named primary):

tarantool.sock> s:create_index('primary', {

> type = 'hash',

> parts = {'id'}

> })

This is a primary index based on the id field of each tuple.

Insert three tuples (our name for records) into the space:

tarantool.sock> s:insert{1, 'Roxette', 1986}

tarantool.sock> s:insert{2, 'Scorpions', 2015}

tarantool.sock> s:insert{3, 'Ace of Base', 1993}

To select a tuple using the primary index, say:

tarantool.sock> s:select{3}

The terminal screen now looks like this:

tarantool.sock> s = box.schema.space.create('tester')

---

...

tarantool.sock> s:format({

> {name = 'id', type = 'unsigned'},

> {name = 'band_name', type = 'string'},

> {name = 'year', type = 'unsigned'}

> })

---

...

tarantool.sock> s:create_index('primary', {

> type = 'hash',

> parts = {'id'}

> })

---

- unique: true

parts:

- type: unsigned

is_nullable: false

fieldno: 1

id: 0

space_id: 512

name: primary

type: HASH

...

tarantool.sock> s:insert{1, 'Roxette', 1986}

---

- [1, 'Roxette', 1986]

...

tarantool.sock> s:insert{2, 'Scorpions', 2015}

---

- [2, 'Scorpions', 2015]

...

tarantool.sock> s:insert{3, 'Ace of Base', 1993}

---

- [3, 'Ace of Base', 1993]

...

tarantool.sock> s:select{3}

---

- - [3, 'Ace of Base', 1993]

...

To add a secondary index based on the band_name field, say:

tarantool.sock> s:create_index('secondary', {

> type = 'hash',

> parts = {'band_name'}

> })

To select tuples using the secondary index, say:

tarantool.sock> s.index.secondary:select{'Scorpions'}

---

- - [2, 'Scorpions', 2015]

...

To drop an index, say:

tarantool> s.index.secondary:drop()

---

...

Stopping a container¶

When the testing is over, stop the container politely:

$ docker stop mytarantool

This was a temporary container, and its disk/memory data were flushed when you stopped it. But since you mounted a data directory from the host in the container, Tarantool’s data files were persisted to the host’s disk. Now if you start a new container and mount that data directory in it, Tarantool will recover all data from disk and continue working with the persisted data.

Using a package manager¶

For production purposes, we recommend to install Tarantool via official package manager. You can choose one of three versions: LTS, stable, or beta. An automatic build system creates, tests and publishes packages for every push into a corresponding branch at Tarantool’s GitHub repository.

To download and install the package that’s appropriate for your OS, start a shell (terminal) and enter the command-line instructions provided for your OS at Tarantool’s download page.

Starting Tarantool¶

To start working with Tarantool, run a terminal and say this:

$ tarantool

$ # by doing this, you create a new Tarantool instance

Tarantool starts in the interactive mode and displays a prompt:

tarantool>

Now you can enter requests on the command line.

Note

On production machines, Tarantool’s interactive mode is for system administration only. But we use it for most examples in this manual, because the interactive mode is convenient for learning.

Creating a database¶

Here is how to create a simple test database after installation.

To let Tarantool store data in a separate place, create a new directory dedicated for tests:

$ mkdir ~/tarantool_sandbox $ cd ~/tarantool_sandbox

You can delete the directory when the tests are over.

Check if the default port the database instance will listen to is vacant.

Depending on the release, during installation Tarantool may start a demonstrative global

example.luainstance that listens to the3301port by default. Theexample.luafile showcases basic configuration and can be found in the/etc/tarantool/instances.enabledor/etc/tarantool/instances.availabledirectories.However, we encourage you to perform the instance startup manually, so you can learn.

Make sure the default port is vacant:

To check if the demonstrative instance is running, say:

$ lsof -i :3301 COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME tarantool 6851 root 12u IPv4 40827 0t0 TCP *:3301 (LISTEN)

If it does, kill the corresponding process. In this example:

$ kill 6851

To start Tarantool’s database module and make the instance accept TCP requests on port

3301, say:tarantool> box.cfg{listen = 3301}

Create the first space (named

tester):tarantool> s = box.schema.space.create('tester')

Format the created space by specifying field names and types:

tarantool> s:format({ > {name = 'id', type = 'unsigned'}, > {name = 'band_name', type = 'string'}, > {name = 'year', type = 'unsigned'} > })

Create the first index (named

primary):tarantool> s:create_index('primary', { > type = 'hash', > parts = {'id'} > })

This is a primary index based on the

idfield of each tuple.Insert three tuples (our name for records) into the space:

tarantool> s:insert{1, 'Roxette', 1986} tarantool> s:insert{2, 'Scorpions', 2015} tarantool> s:insert{3, 'Ace of Base', 1993}

To select a tuple using the

primaryindex, say:tarantool> s:select{3}

The terminal screen now looks like this:

tarantool> s = box.schema.space.create('tester') --- ... tarantool> s:format({ > {name = 'id', type = 'unsigned'}, > {name = 'band_name', type = 'string'}, > {name = 'year', type = 'unsigned'} > }) --- ... tarantool> s:create_index('primary', { > type = 'hash', > parts = {'id'} > }) --- - unique: true parts: - type: unsigned is_nullable: false fieldno: 1 id: 0 space_id: 512 name: primary type: HASH ... tarantool> s:insert{1, 'Roxette', 1986} --- - [1, 'Roxette', 1986] ... tarantool> s:insert{2, 'Scorpions', 2015} --- - [2, 'Scorpions', 2015] ... tarantool> s:insert{3, 'Ace of Base', 1993} --- - [3, 'Ace of Base', 1993] ... tarantool> s:select{3} --- - - [3, 'Ace of Base', 1993] ...

To add a secondary index based on the

band_namefield, say:tarantool> s:create_index('secondary', { > type = 'hash', > parts = {'band_name'} > })

To select tuples using the

secondaryindex, say:tarantool> s.index.secondary:select{'Scorpions'} --- - - [2, 'Scorpions', 2015] ...

Now, to prepare for the example in the next section, try this:

tarantool> box.schema.user.grant('guest', 'read,write,execute', 'universe')

Connecting remotely¶

In the request box.cfg{listen = 3301} that we made earlier, the listen

value can be any form of a URI (uniform resource identifier).

In this case, it’s just a local port: port 3301. You can send requests to the

listen URI via:

telnet,- a connector,

- another instance of Tarantool (using the console module), or

- tarantoolctl utility.

Let’s try (4).

Switch to another terminal. On Linux, for example, this means starting another

instance of a Bash shell. You can switch to any working directory in the new

terminal, not necessarily to ~/tarantool_sandbox.

Start the tarantoolctl utility:

$ tarantoolctl connect '3301'

This means “use tarantoolctl connect to connect to the Tarantool instance

that’s listening on localhost:3301”.

Try this request:

localhost:3301> box.space.tester:select{2}

This means “send a request to that Tarantool instance, and display the result”. The result in this case is one of the tuples that was inserted earlier. Your terminal screen should now look like this:

$ tarantoolctl connect 3301

/usr/local/bin/tarantoolctl: connected to localhost:3301

localhost:3301> box.space.tester:select{2}

---

- - [2, 'Scorpions', 2015]

...

You can repeat box.space...:insert{} and box.space...:select{}

indefinitely, on either Tarantool instance.

When the testing is over:

- To drop the space:

s:drop() - To stop

tarantoolctl: Ctrl+C or Ctrl+D - To stop Tarantool (an alternative): the standard Lua function os.exit()

- To stop Tarantool (from another terminal):

sudo pkill -f tarantool - To destroy the test:

rm -r ~/tarantool_sandbox

Connecting from your favorite language¶

Now that you have a Tarantool database, let’s see how to connect to it from Python, PHP and Go.

Connecting from Python¶

Pre-requisites¶

Before we proceed:

Install the

tarantoolmodule. We recommend usingpython3andpip3.Start Tarantool (locally or in Docker) and make sure that you have created and populated a database as we suggested earlier:

box.cfg{listen = 3301} s = box.schema.space.create('tester') s:format({ {name = 'id', type = 'unsigned'}, {name = 'band_name', type = 'string'}, {name = 'year', type = 'unsigned'} }) s:create_index('primary', { type = 'hash', parts = {'id'} }) s:create_index('secondary', { type = 'hash', parts = {'band_name'} }) s:insert{1, 'Roxette', 1986} s:insert{2, 'Scorpions', 2015} s:insert{3, 'Ace of Base', 1993}

Important

Please do not close the terminal window where Tarantool is running – you’ll need it soon.

In order to connect to Tarantool as an administrator, reset the password for the

adminuser:box.schema.user.passwd('pass')

Connecting to Tarantool¶

To get connected to the Tarantool server, say this:

>>> import tarantool

>>> connection = tarantool.connect("localhost", 3301)

You can also specify the user name and password, if needed:

>>> tarantool.connect("localhost", 3301, user=username, password=password)

The default user is guest.

Manipulating the data¶

A space is a container for tuples. To access a space as a named object,

use connection.space:

>>> tester = connection.space('tester')

Inserting data¶

To insert a tuple into a space, use insert:

>>> tester.insert((4, 'ABBA', 1972))

[4, 'ABBA', 1972]

Querying data¶

Let’s start with selecting a tuple by the primary key

(in our example, this is the index named primary, based on the id field

of each tuple). Use select:

>>> tester.select(4)

[4, 'ABBA', 1972]

Next, select tuples by a secondary key. For this purpose, you need to specify the number or name of the index.

First off, select tuples using the index number:

>>> tester.select('Scorpions', index=1)

[2, 'Scorpions', 2015]

(We say index=1 because index numbers in Tarantool start with 0,

and we’re using our second index here.)

Now make a similar query by the index name and make sure that the result is the same:

>>> tester.select('Scorpions', index='secondary')

[2, 'Scorpions', 2015]

Finally, select all the tuples in a space via a select with no

arguments:

>>> tester.select()

Updating data¶

Update a field value using update:

>>> tester.update(4, [('=', 1, 'New group'), ('+', 2, 2)])

This updates the value of field 1 and increases the value of field 2

in the tuple with id = 4. If a tuple with this id doesn’t exist,

Tarantool will return an error.

Now use replace to totally replace the tuple that matches the

primary key. If a tuple with this primary key doesn’t exist, Tarantool will

do nothing.

>>> tester.replace((4, 'New band', 2015))

You can also update the data using upsert that works similarly

to update, but creates a new tuple if the old one was not found.

>>> tester.upsert((4, 'Another band', 2000), [('+', 2, 5)])

This increases by 5 the value of field 2 in the tuple with id = 4, – or

inserts the tuple (4, "Another band", 2000) if a tuple with this id

doesn’t exist.

Deleting data¶

To delete a tuple, use delete(primary_key):

>>> tester.delete(4)

[4, 'New group', 2012]

To delete all tuples in a space (or to delete an entire space), use call.

We’ll focus on this function in more detail in the

next section.

To delete all tuples in a space, call space:truncate:

>>> connection.call('box.space.tester:truncate', ())

To delete an entire space, call space:drop.

This requires connecting to Tarantool as the admin user:

>>> connection.call('box.space.tester:drop', ())

Executing stored procedures¶

Switch to the terminal window where Tarantool is running.

Note

If you don’t have a terminal window with remote connection to Tarantool, check out these guides:

Define a simple Lua function:

function sum(a, b)

return a + b

end

Now we have a Lua function defined in Tarantool. To invoke this function from

python, use call:

>>> connection.call('sum', (3, 2))

5

To send bare Lua code for execution, use eval:

>>> connection.eval('return 4 + 5')

9

Connecting from PHP¶

Pre-requisites¶

Before we proceed:

Install the

tarantool/clientlibrary.Start Tarantool (locally or in Docker) and make sure that you have created and populated a database as we suggested earlier:

box.cfg{listen = 3301} s = box.schema.space.create('tester') s:format({ {name = 'id', type = 'unsigned'}, {name = 'band_name', type = 'string'}, {name = 'year', type = 'unsigned'} }) s:create_index('primary', { type = 'hash', parts = {'id'} }) s:create_index('secondary', { type = 'hash', parts = {'band_name'} }) s:insert{1, 'Roxette', 1986} s:insert{2, 'Scorpions', 2015} s:insert{3, 'Ace of Base', 1993}

Important

Please do not close the terminal window where Tarantool is running – you’ll need it soon.

In order to connect to Tarantool as an administrator, reset the password for the

adminuser:box.schema.user.passwd('pass')

Connecting to Tarantool¶

To configure a connection to the Tarantool server, say this:

use Tarantool\Client\Client;

require __DIR__.'/vendor/autoload.php';

$client = Client::fromDefaults();

The connection itself will be established at the first request. You can also specify the user name and password, if needed:

$client = Client::fromOptions([

'uri' => 'tcp://127.0.0.1:3301',

'username' => '<username>',

'password' => '<password>'

]);

The default user is guest.

Manipulating the data¶

A space is a container for tuples. To access a space as a named object,

use getSpace:

$tester = $client->getSpace('tester');

Inserting data¶

To insert a tuple into a space, use insert:

$result = $tester->insert([4, 'ABBA', 1972]);

Querying data¶

Let’s start with selecting a tuple by the primary key

(in our example, this is the index named primary, based on the id field

of each tuple). Use select:

use Tarantool\Client\Schema\Criteria;

$result = $tester->select(Criteria::key([4]));

printf(json_encode($result));

[[4, 'ABBA', 1972]]

Next, select tuples by a secondary key. For this purpose, you need to specify the number or name of the index.

First off, select tuples using the index number:

$result = $tester->select(Criteria::index(1)->andKey(['Scorpions']));

printf(json_encode($result));

[2, 'Scorpions', 2015]

(We say index(1) because index numbers in Tarantool start with 0,

and we’re using our second index here.)

Now make a similar query by the index name and make sure that the result is the same:

$result = $tester->select(Criteria::index('secondary')->andKey(['Scorpions']));

printf(json_encode($result));

[2, 'Scorpions', 2015]

Finally, select all the tuples in a space via a select:

$result = $tester->select(Criteria::allIterator());

Updating data¶

Update a field value using update:

use Tarantool\Client\Schema\Operations;

$result = $tester->update([4], Operations::set(1, 'New group')->andAdd(2, 2));

This updates the value of field 1 and increases the value of field 2

in the tuple with id = 4. If a tuple with this id doesn’t exist,

Tarantool will return an error.

Now use replace to totally replace the tuple that matches the

primary key. If a tuple with this primary key doesn’t exist, Tarantool will

do nothing.

$result = $tester->replace([4, 'New band', 2015]);

You can also update the data using upsert that works similarly

to update, but creates a new tuple if the old one was not found.

use Tarantool\Client\Schema\Operations;

$tester->upsert([4, 'Another band', 2000], Operations::add(2, 5));

This increases by 5 the value of field 2 in the tuple with id = 4, – or

inserts the tuple (4, "Another band", 2000) if a tuple with this id

doesn’t exist.

Deleting data¶

To delete a tuple, use delete(primary_key):

$result = $tester->delete([4]);

To delete all tuples in a space (or to delete an entire space), use call.

We’ll focus on this function in more detail in the

next section.

To delete all tuples in a space, call space:truncate:

$result = $client->call('box.space.tester:truncate');

To delete an entire space, call space:drop.

This requires connecting to Tarantool as the admin user:

$result = $client->call('box.space.tester:drop');

Executing stored procedures¶

Switch to the terminal window where Tarantool is running.

Note

If you don’t have a terminal window with remote connection to Tarantool, check out these guides:

Define a simple Lua function:

function sum(a, b)

return a + b

end

Now we have a Lua function defined in Tarantool. To invoke this function from

php, use call:

$result = $client->call('sum', 3, 2);

To send bare Lua code for execution, use eval:

$result = $client->evaluate('return 4 + 5');

Connecting from Go¶

Pre-requisites¶

Before we proceed:

Install the

go-tarantoollibrary.Start Tarantool (locally or in Docker) and make sure that you have created and populated a database as we suggested earlier:

box.cfg{listen = 3301} s = box.schema.space.create('tester') s:format({ {name = 'id', type = 'unsigned'}, {name = 'band_name', type = 'string'}, {name = 'year', type = 'unsigned'} }) s:create_index('primary', { type = 'hash', parts = {'id'} }) s:create_index('secondary', { type = 'hash', parts = {'band_name'} }) s:insert{1, 'Roxette', 1986} s:insert{2, 'Scorpions', 2015} s:insert{3, 'Ace of Base', 1993}

Important

Please do not close the terminal window where Tarantool is running – you’ll need it soon.

In order to connect to Tarantool as an administrator, reset the password for the

adminuser:box.schema.user.passwd('pass')

Connecting to Tarantool¶

To get connected to the Tarantool server, write a simple Go program:

package main

import (

"fmt"

"github.com/tarantool/go-tarantool"

)

func main() {

conn, err := tarantool.Connect("127.0.0.1:3301", tarantool.Opts{

User: "admin",

Pass: "pass",

})

if err != nil {

log.Fatalf("Connection refused")

}

defer conn.Close()

// Your logic for interacting with the database

}

The default user is guest.

Manipulating the data¶

Inserting data¶

To insert a tuple into a space, use Insert:

resp, err = conn.Insert("tester", []interface{}{4, "ABBA", 1972})

This inserts the tuple (4, "ABBA", 1972) into a space named tester.

The response code and data are available in the tarantool.Response structure:

code := resp.Code

data := resp.Data

Querying data¶

To select a tuple from a space, use Select:

resp, err = conn.Select("tester", "primary", 0, 1, tarantool.IterEq, []interface{}{4})

This selects a tuple by the primary key with offset = 0 and limit = 1

from a space named tester (in our example, this is the index named primary,

based on the id field of each tuple).

Next, select tuples by a secondary key.

resp, err = conn.Select("tester", "secondary", 0, 1, tarantool.IterEq, []interface{}{"ABBA"})

Finally, it would be nice to select all the tuples in a space. But there is no one-liner for this in Go; you would need a script like this one.

For more examples, see https://github.com/tarantool/go-tarantool#usage

Updating data¶

Update a field value using Update:

resp, err = conn.Update("tester", "primary", []interface{}{4}, []interface{}{[]interface{}{"+", 2, 3}})

This increases by 3 the value of field 2 in the tuple with id = 4.

If a tuple with this id doesn’t exist, Tarantool will return an error.

Now use Replace to totally replace the tuple that matches the

primary key. If a tuple with this primary key doesn’t exist, Tarantool will

do nothing.

resp, err = conn.Replace("tester", []interface{}{4, "New band", 2011})

You can also update the data using Upsert that works similarly

to Update, but creates a new tuple if the old one was not found.

resp, err = conn.Upsert("tester", []interface{}{4, "Another band", 2000}, []interface{}{[]interface{}{"+", 2, 5}})

This increases by 5 the value of the third field in the tuple with id = 4, – or

inserts the tuple (4, "Another band", 2000) if a tuple with this id

doesn’t exist.

Deleting data¶

To delete a tuple, use сonnection.Delete:

resp, err = conn.Delete("tester", "primary", []interface{}{4})

To delete all tuples in a space (or to delete an entire space), use Call.

We’ll focus on this function in more detail in the

next section.

To delete all tuples in a space, call space:truncate:

resp, err = conn.Call("box.space.tester:truncate", []interface{}{})

To delete an entire space, call space:drop.

This requires connecting to Tarantool as the admin user:

resp, err = conn.Call("box.space.tester:drop", []interface{}{})

Executing stored procedures¶

Switch to the terminal window where Tarantool is running.

Note

If you don’t have a terminal window with remote connection to Tarantool, check out these guides:

Define a simple Lua function:

function sum(a, b)

return a + b

end

Now we have a Lua function defined in Tarantool. To invoke this function from

go, use Call:

resp, err = conn.Call("sum", []interface{}{2, 3})

To send bare Lua code for execution, use Eval:

resp, err = connection.Eval("return 4 + 5", []interface{}{})

Creating your first Tarantool Cartridge application¶

Here we’ll walk you through developing a simple cluster application.

First, set up the development environment.

Next, create an application named myapp. Say:

$ cartridge create --name myapp

This will create a Tarantool Cartridge application in the ./myapp directory,

with a handful of

template files and directories

inside.

Go inside and make a dry run:

$ cd ./myapp

$ cartridge build

$ cartridge start

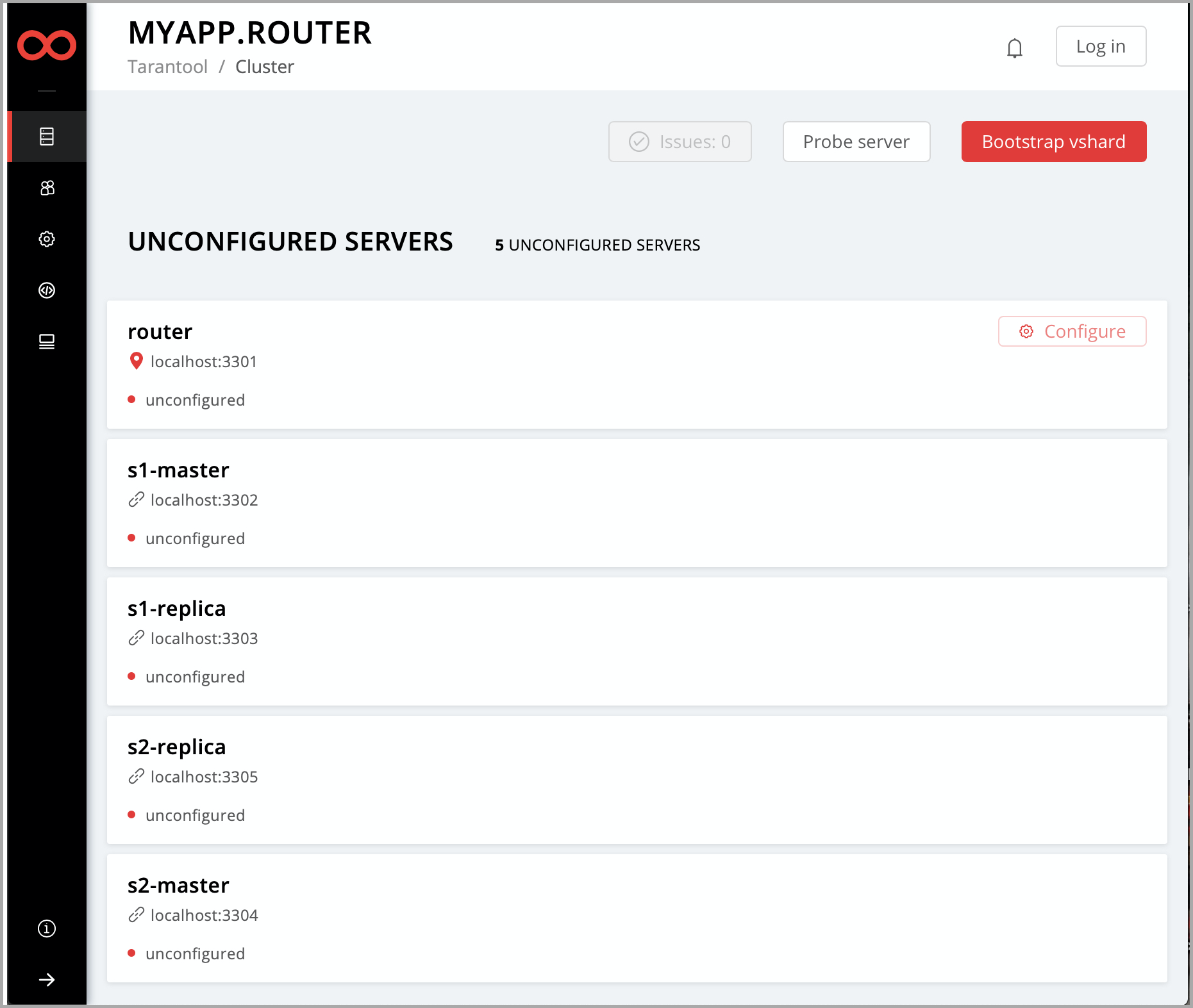

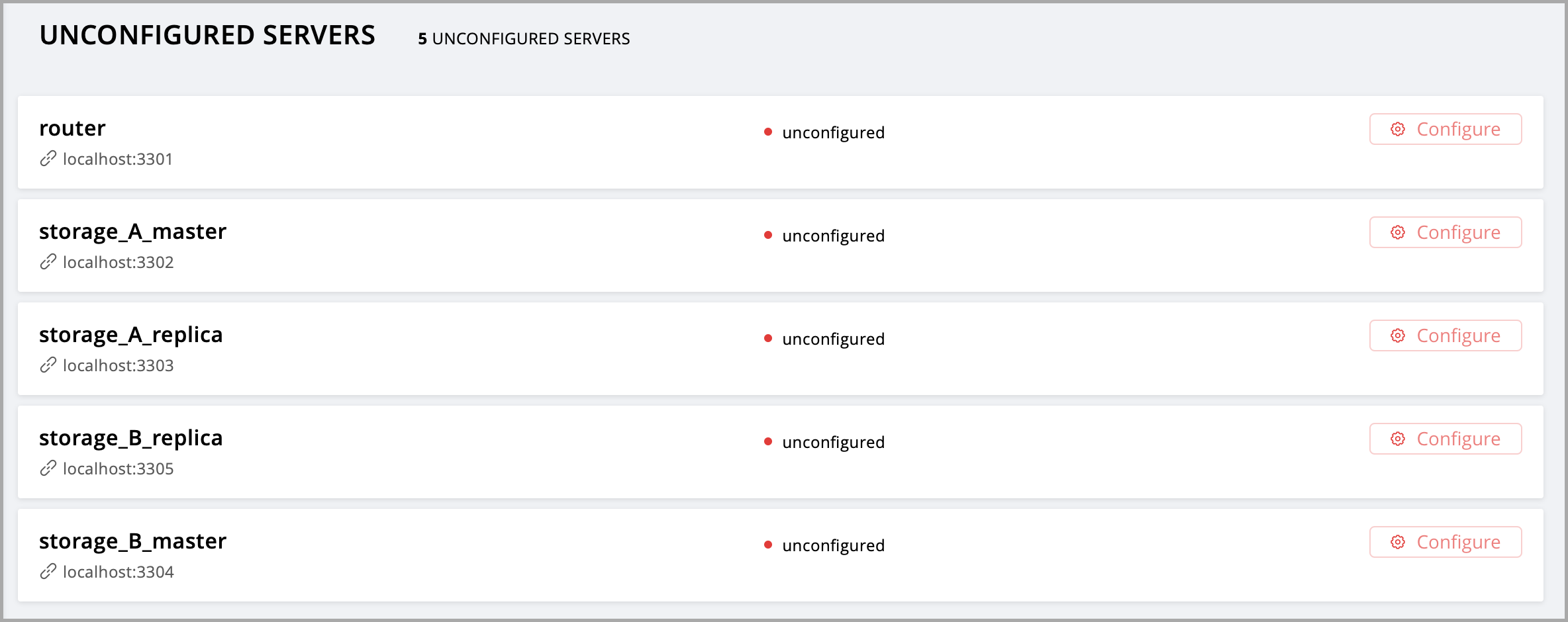

This will build the application locally, start 5 instances of Tarantool, and run the application as it is, with no business logic yet.

Why 5 instances? See the instances.yml file in your application directory.

It contains the configuration of all instances

that you can use in the cluster. By default, it defines configuration for 5

Tarantool instances.

myapp.router:

workdir: ./tmp/db_dev/3301

advertise_uri: localhost:3301

http_port: 8081

myapp.s1-master:

workdir: ./tmp/db_dev/3302

advertise_uri: localhost:3302

http_port: 8082

myapp.s1-replica:

workdir: ./tmp/db_dev/3303

advertise_uri: localhost:3303

http_port: 8083

myapp.s2-master:

workdir: ./tmp/db_dev/3304

advertise_uri: localhost:3304

http_port: 8084

myapp.s2-replica:

workdir: ./tmp/db_dev/3305

advertise_uri: localhost:3305

http_port: 8085

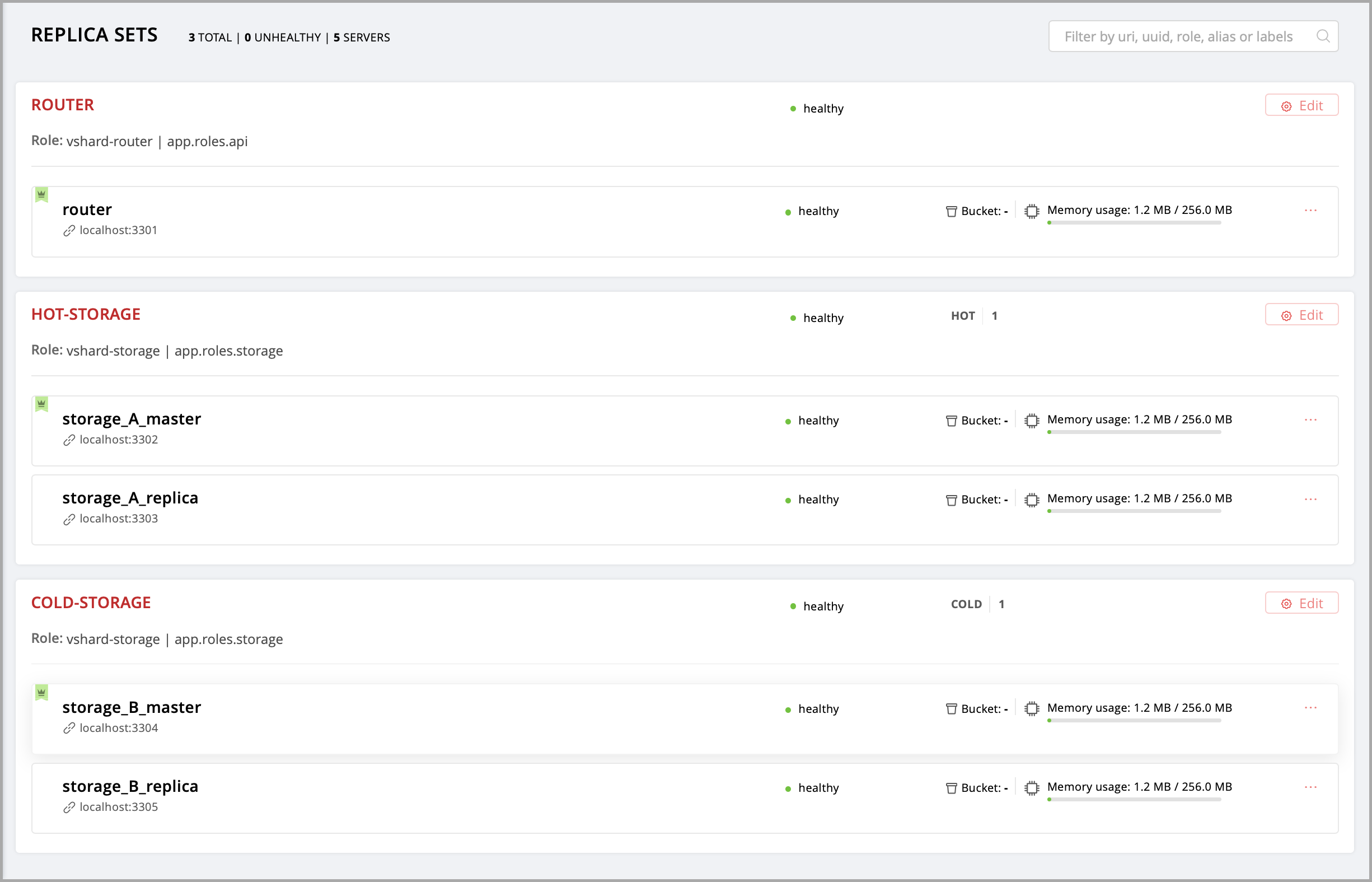

You can already see these instances in the cluster management web interface at

http://localhost:8081 (here 8081 is the HTTP port of the first instance

specified in instances.yml).

Okay, press Ctrl + C to stop the cluster for a while.

Now it’s time to add some business logic to your application. This will be an evergreen “Hello world!”” – just to keep things simple.

Rename the template file app/roles/custom.lua to hello-world.lua.

$ mv app/roles/custom.lua app/roles/hello-world.lua

This will be your role. In Tarantool Cartridge, a role is a Lua module that implements some instance-specific functions and/or logic. Further on we’ll show how to add code to a role, build it, enable and test.

There is already some code in the role’s init() function.

local function init(opts) -- luacheck: no unused args

-- if opts.is_master then

-- end

local httpd = cartridge.service_get('httpd')

httpd:route({method = 'GET', path = '/hello'}, function()

return {body = 'Hello world!'}

end)

return true

end

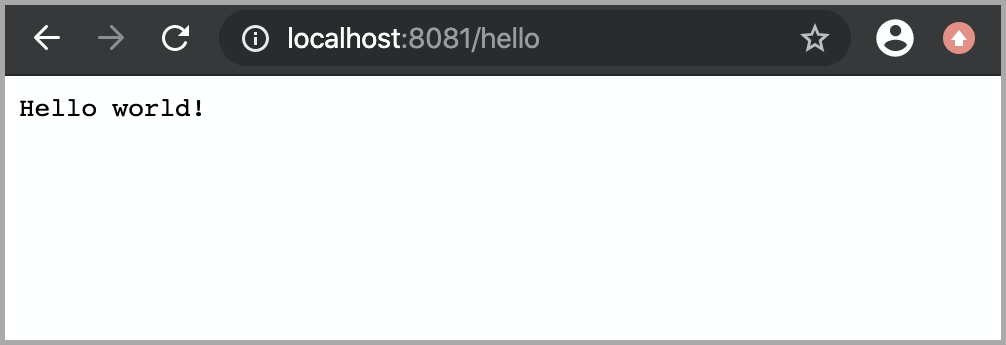

This exports an HTTP endpoint /hello. For example, http://localhost:8081/hello

if you address the first instance from the instances.yml file.

If you open it in a browser after enabling the role (we’ll do it here a bit later),

you’ll see “Hello world!” on the page.

Let’s add some more code there.

local function init(opts) -- luacheck: no unused args

-- if opts.is_master then

-- end

local httpd = cartridge.service_get('httpd')

httpd:route({method = 'GET', path = '/hello'}, function()

return {body = 'Hello world!'}

end)

local log = require('log')

log.info('Hello world!')

return true

end

This writes “Hello, world!” to the console when the role gets enabled, so you’ll have a chance to spot this. No rocket science.

Next, amend role_name in the “return” section of the hello-world.lua file.

This text will be displayed as a label for your role in the cluster management

web interface.

return {

role_name = 'Hello world!',

init = init,

stop = stop,

validate_config = validate_config,

apply_config = apply_config,

}

The final thing to do before you can run the application is to add your role to

the list of available cluster roles in the init.lua file.

local ok, err = cartridge.cfg({

workdir = 'tmp/db',

roles = {

'cartridge.roles.vshard-storage',

'cartridge.roles.vshard-router',

'app.roles.hello-world'

},

cluster_cookie = 'myapp-cluster-cookie',

})

Now the cluster will be aware of your role.

Why app.roles.hello-world? By default, the role name here should match the

path from the application root (./myapp) to the role file

(app/roles/hello-world.lua).

Fine! Your role is ready. Re-build the application and re-start the cluster now:

$ cartridge build

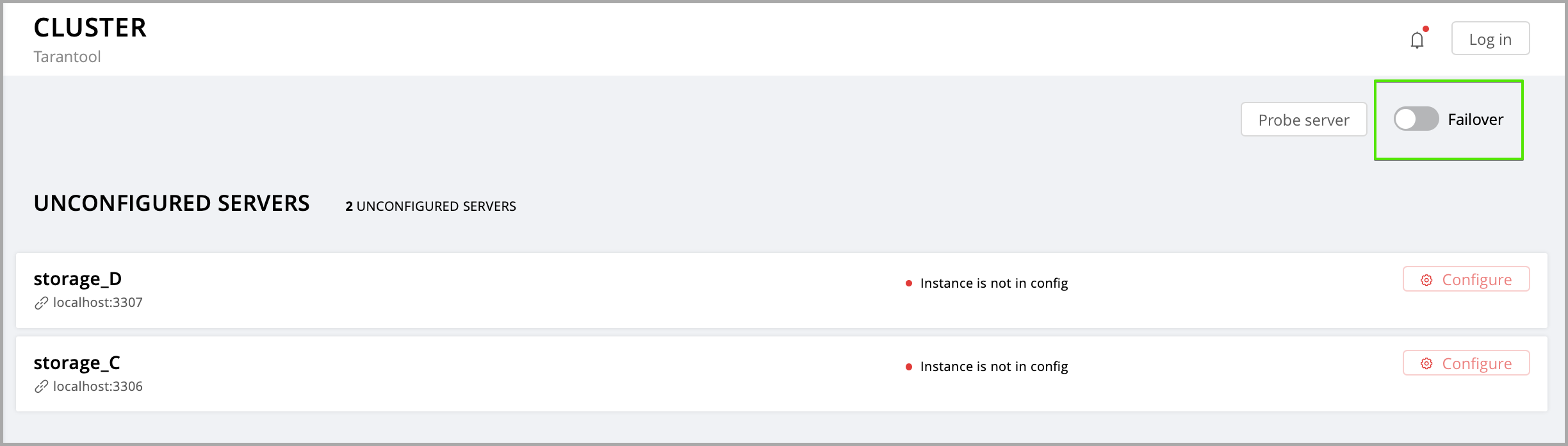

$ cartridge start

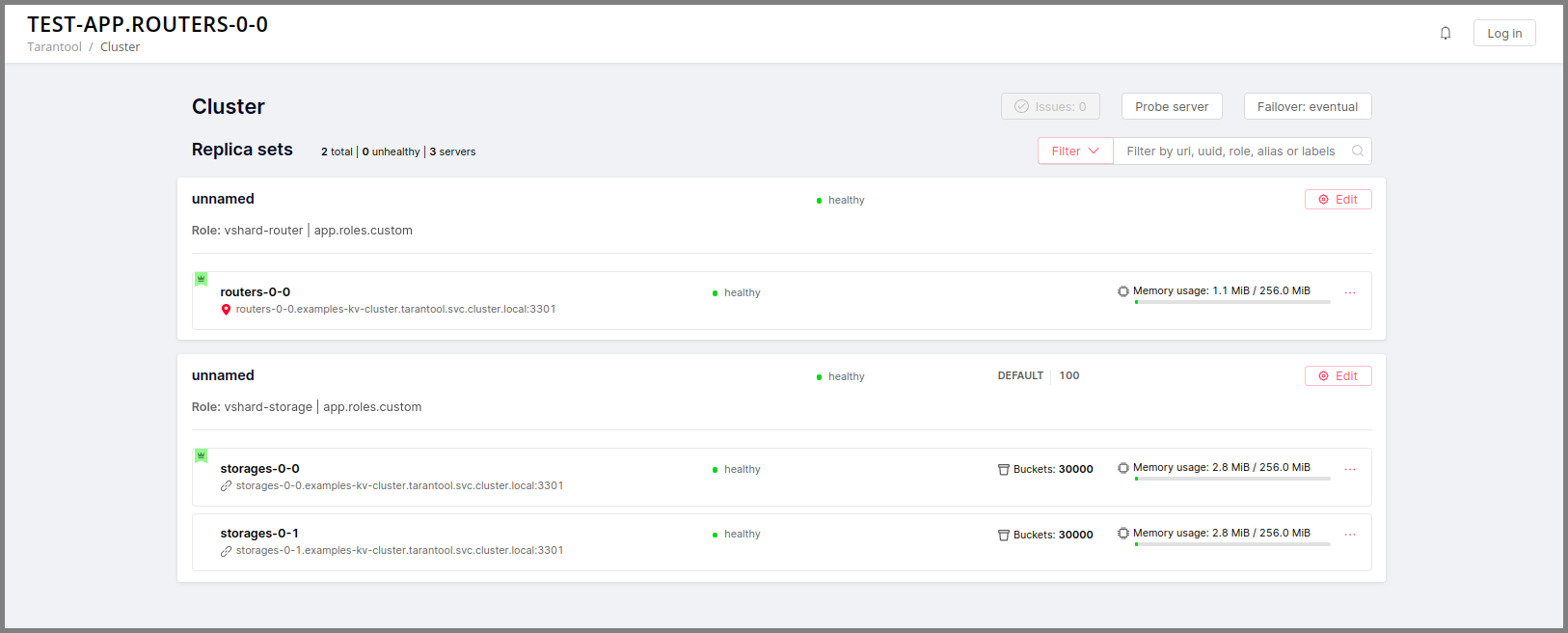

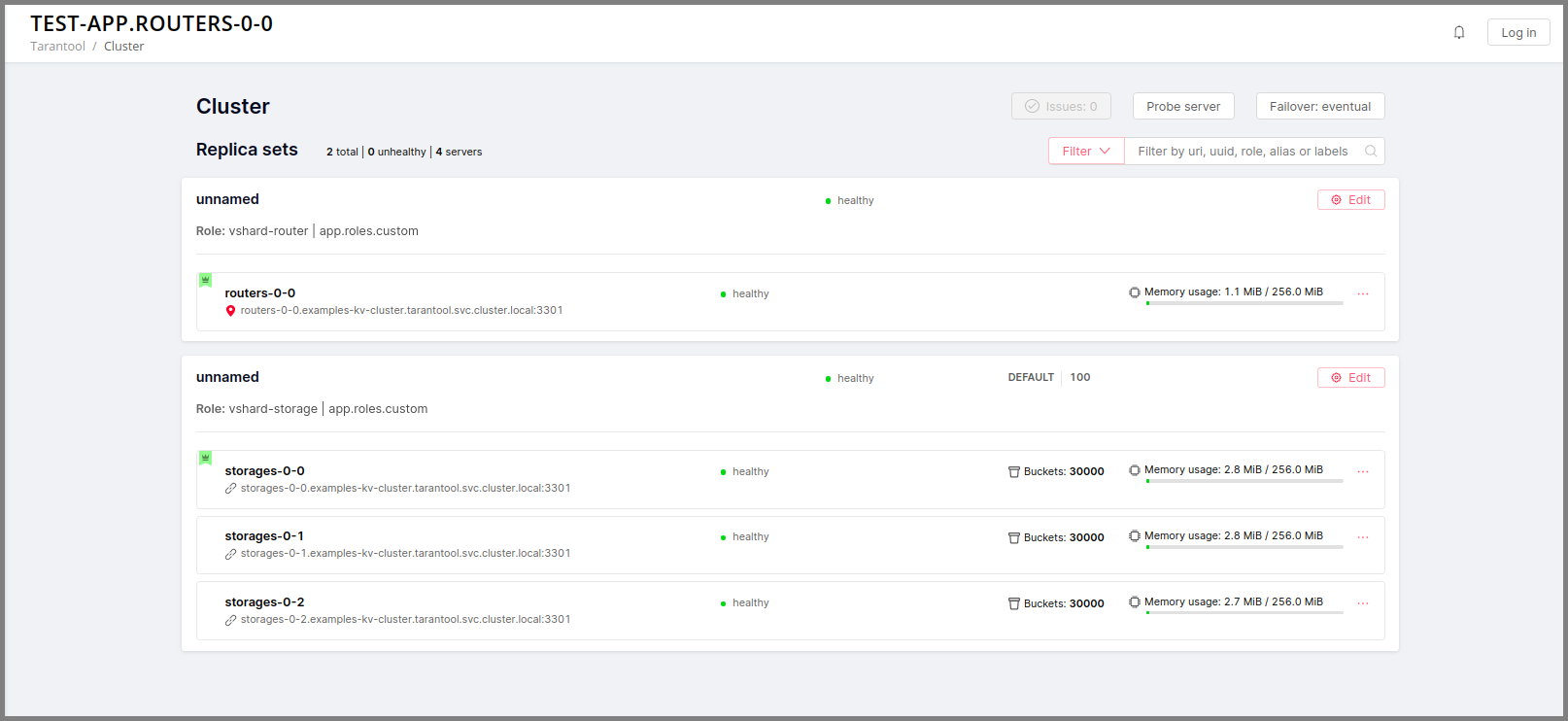

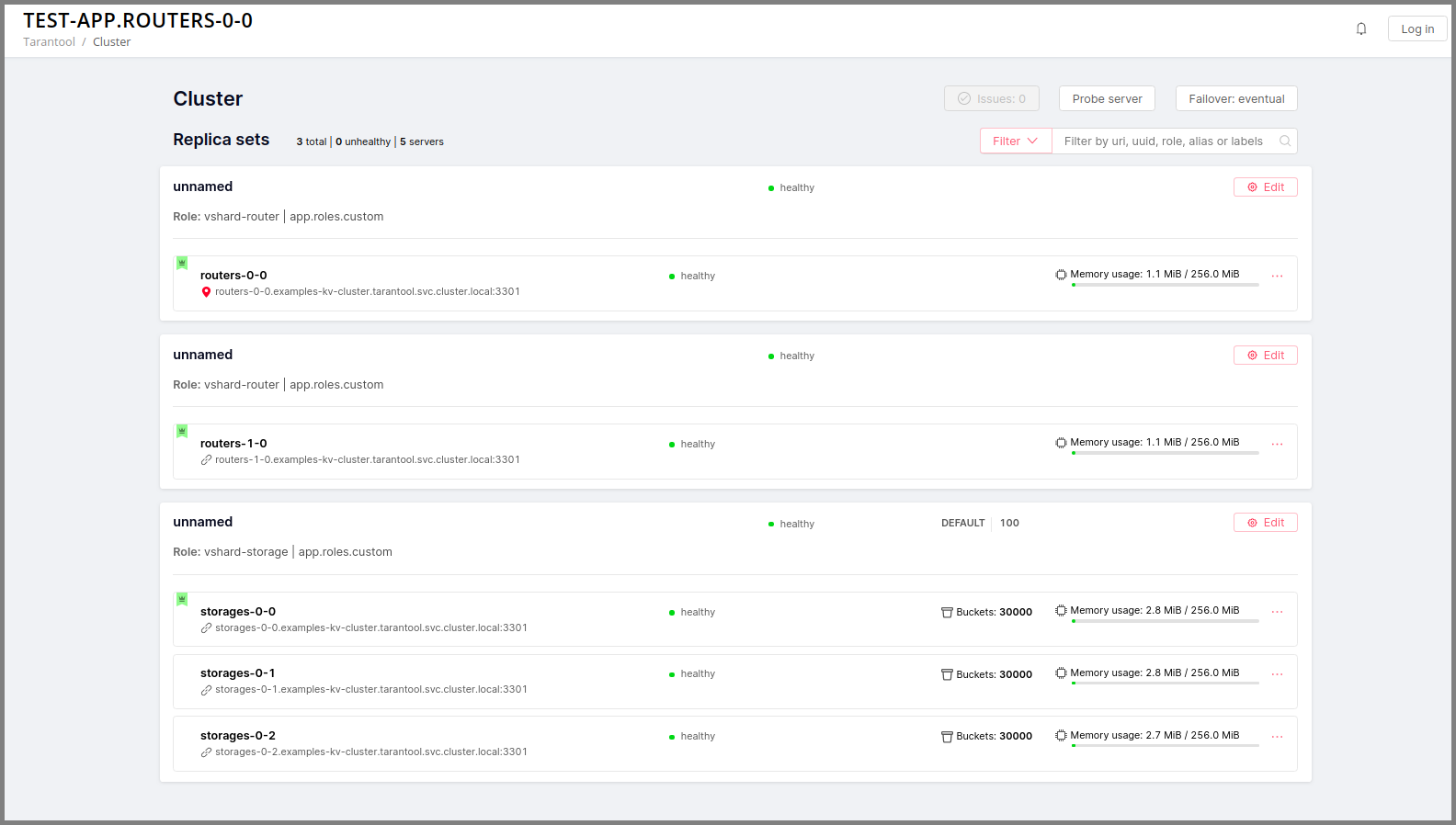

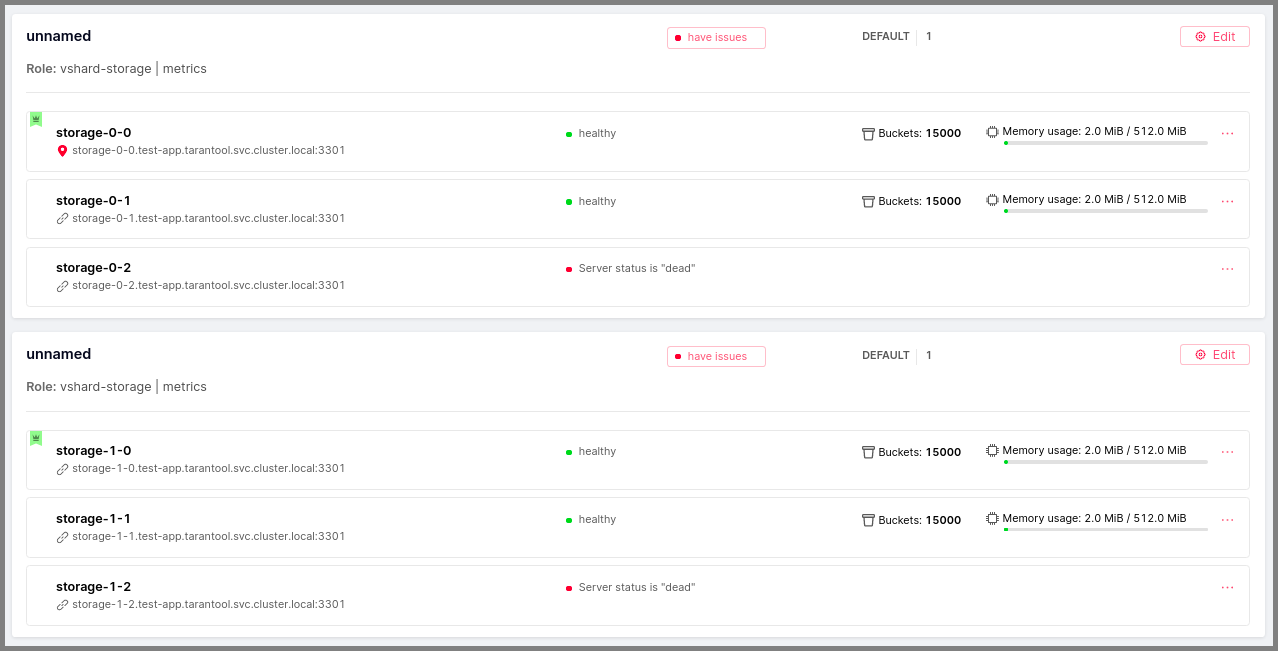

Now all instances are up, but idle, waiting for you to enable roles for them.

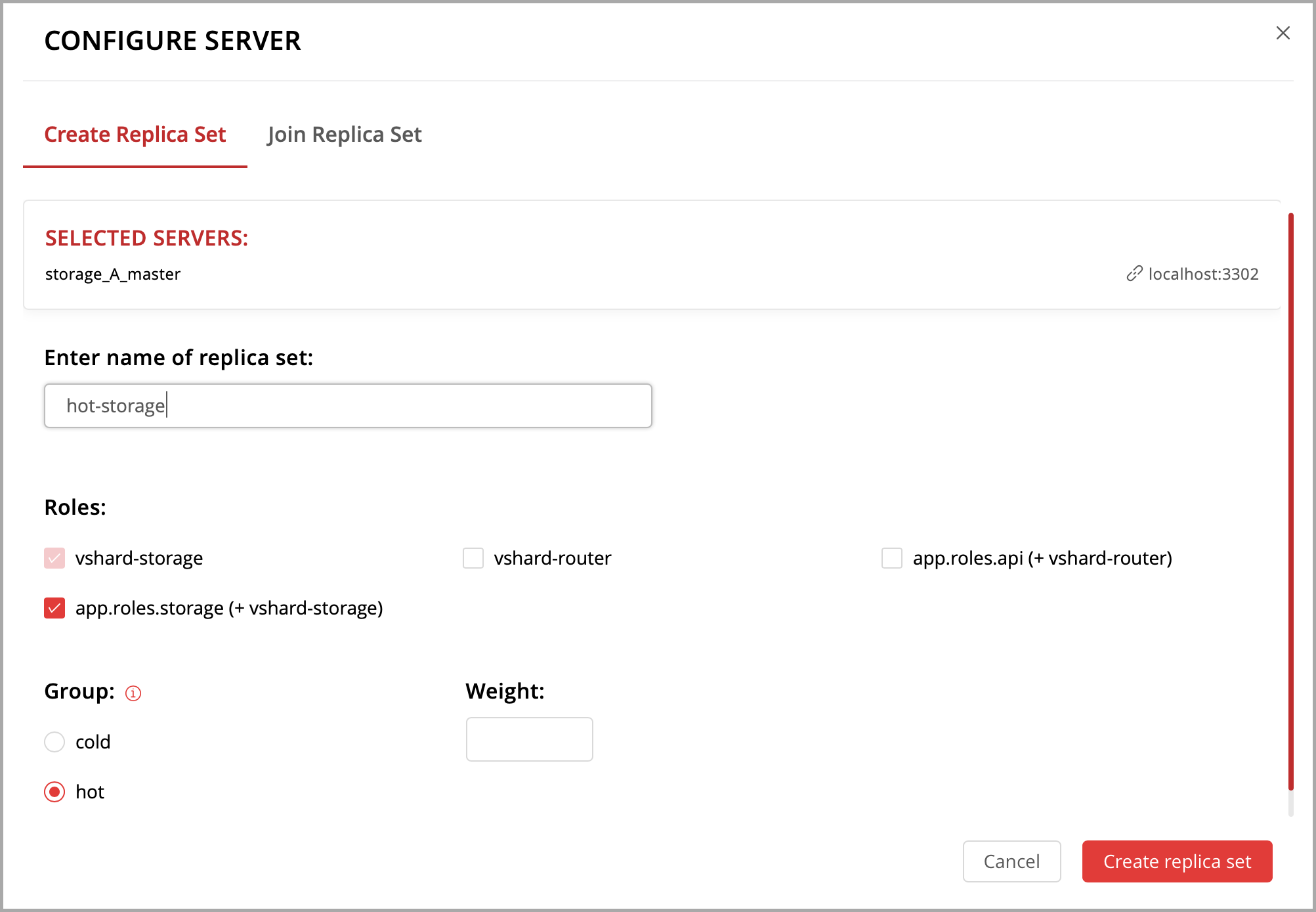

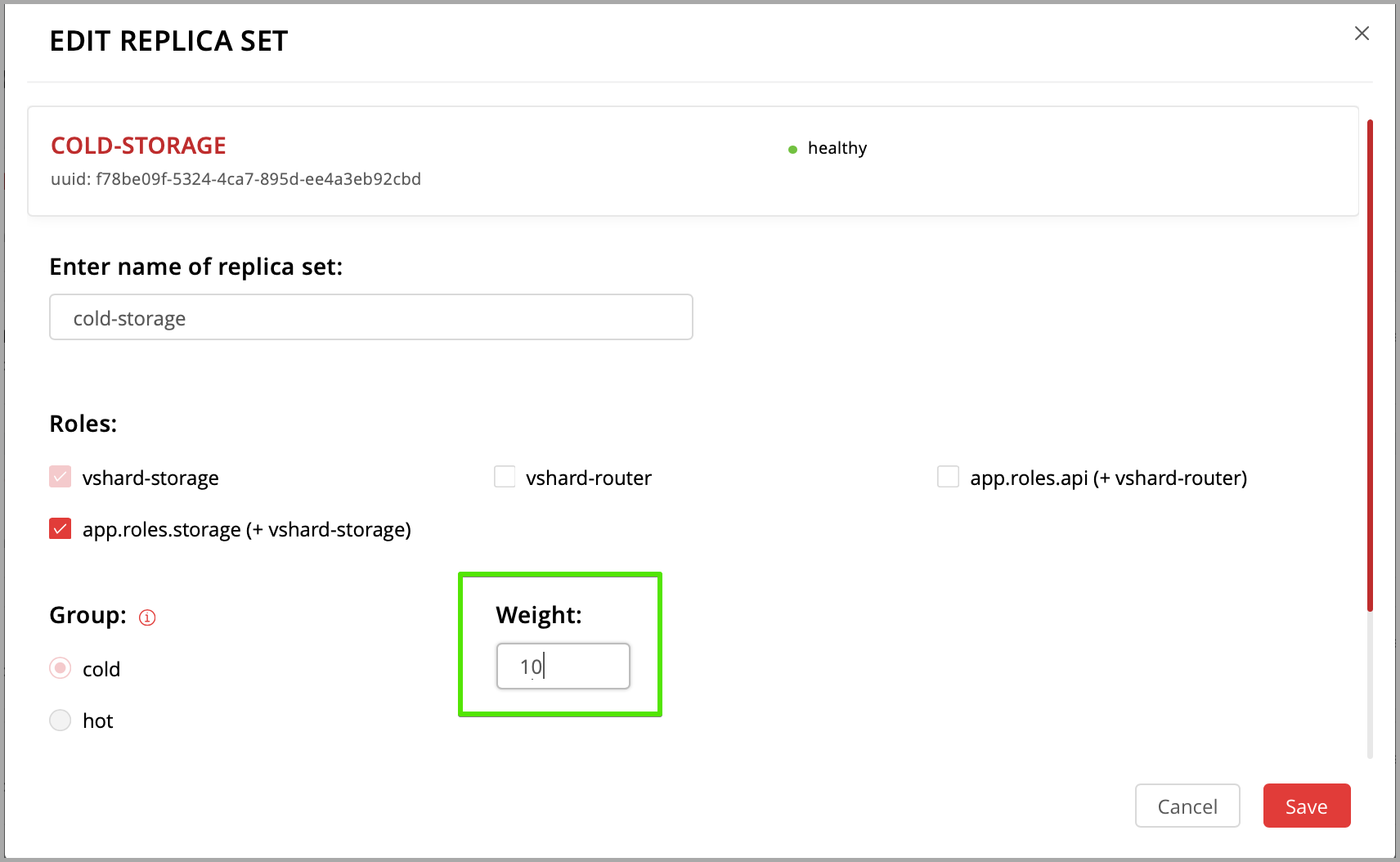

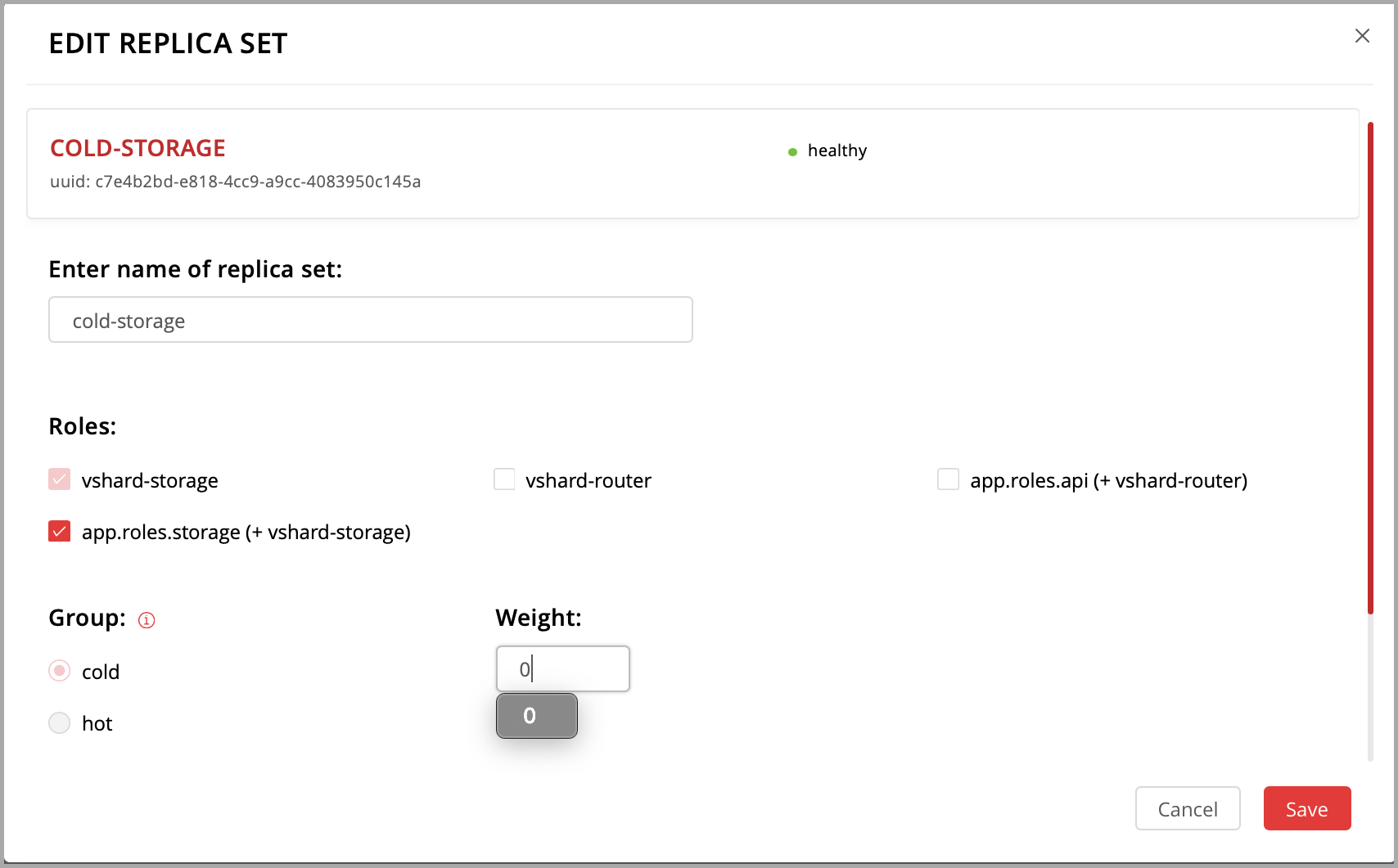

Instances (replicas) in a Tarantool Cartridge cluster are organized into replica sets. Roles are enabled per replica set, so all instances in a replica set have the same roles enabled.

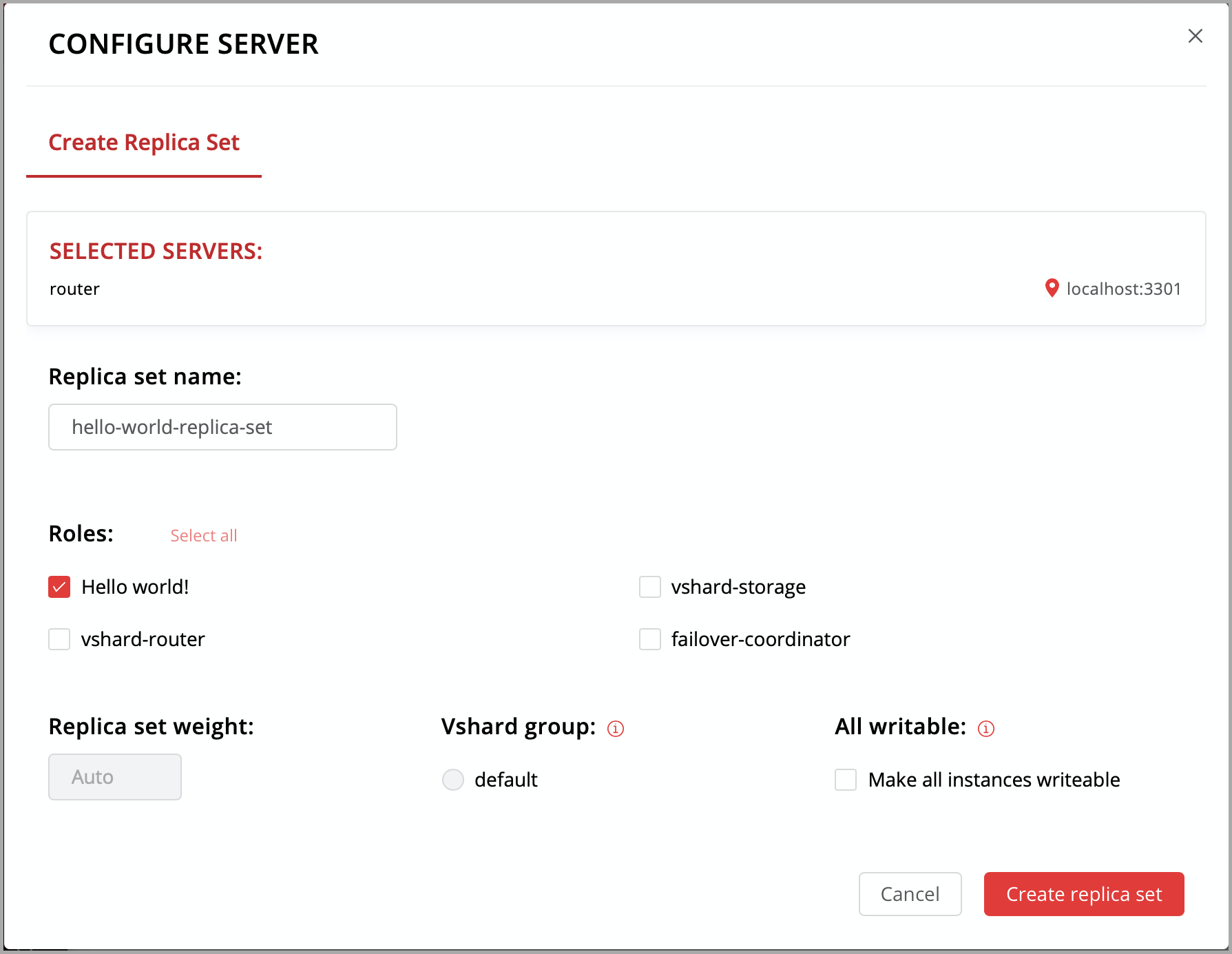

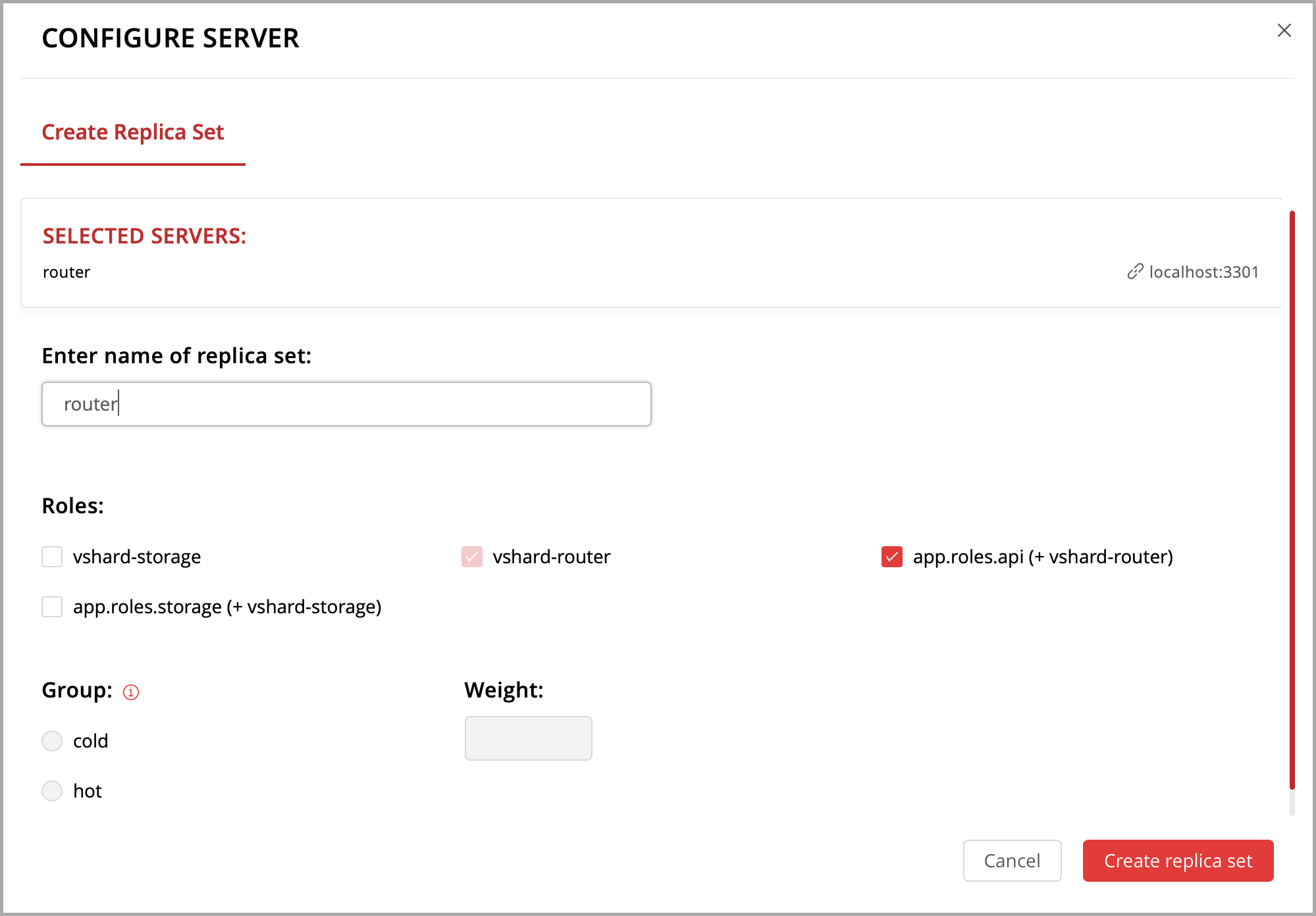

Let’s create a replica set containing just one instance and enable your role:

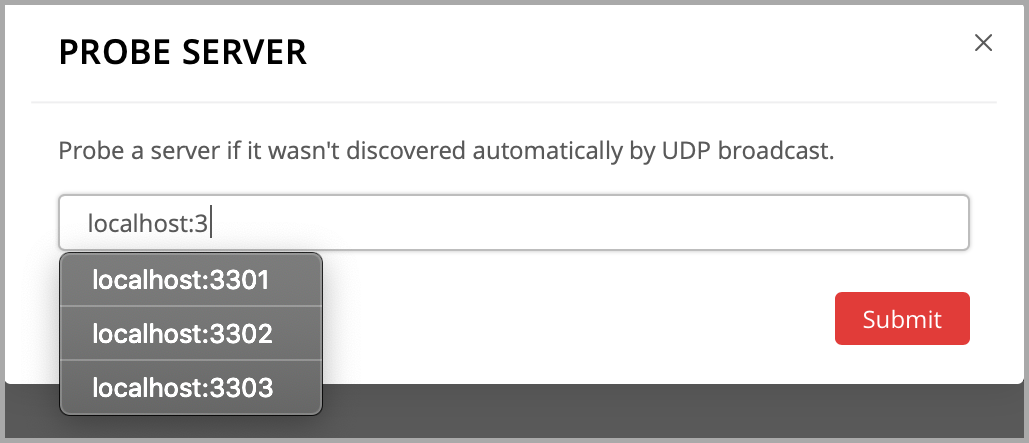

Open the cluster management web interface at http://localhost:8081.

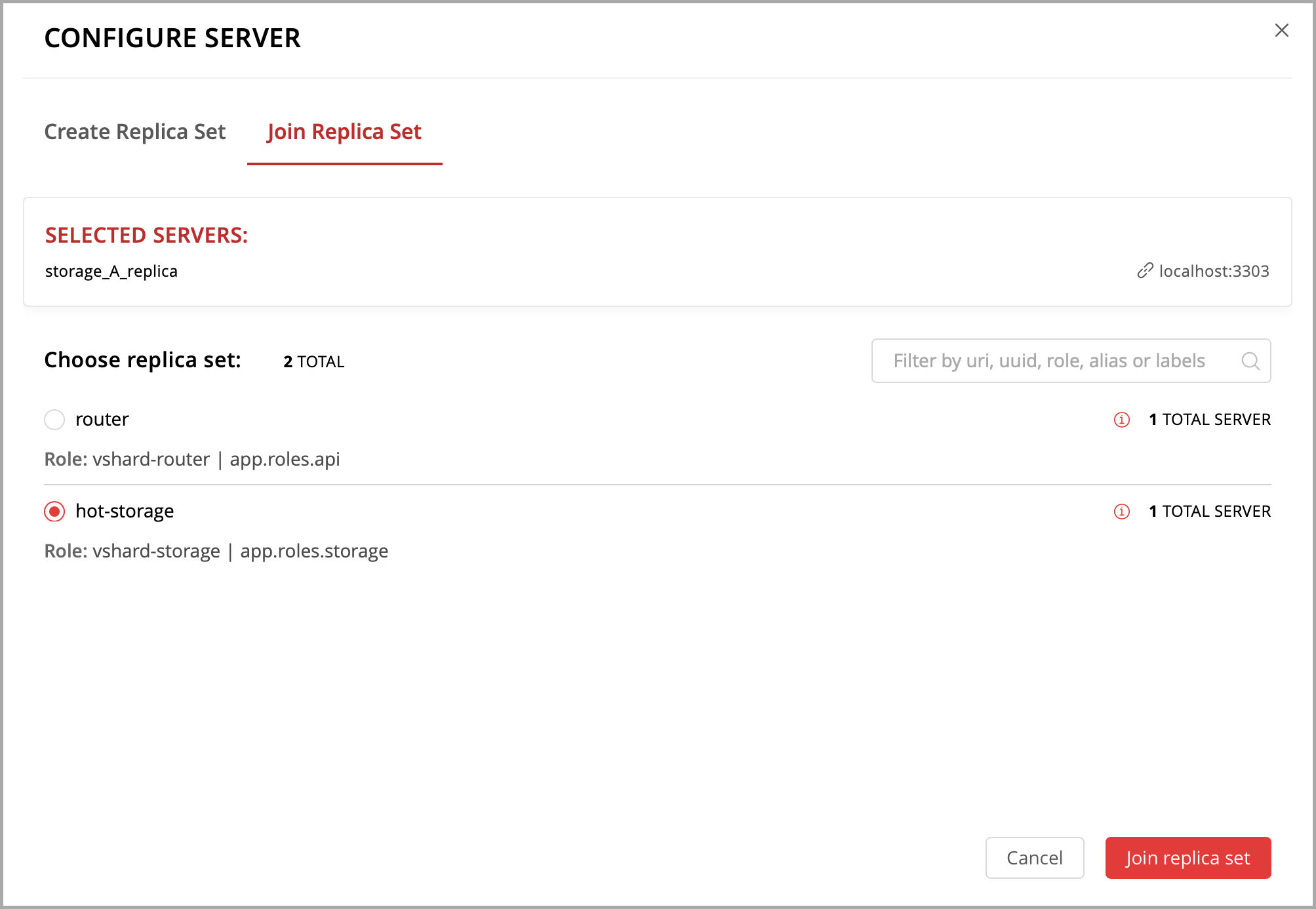

Click Configure.

Check the role

Hello world!to enable it. Notice that the role name here matches the label text that you specified in therole_nameparameter in thehello-world.luafile.(Optionally) Specify the replica set name, for example “hello-world-replica-set”.

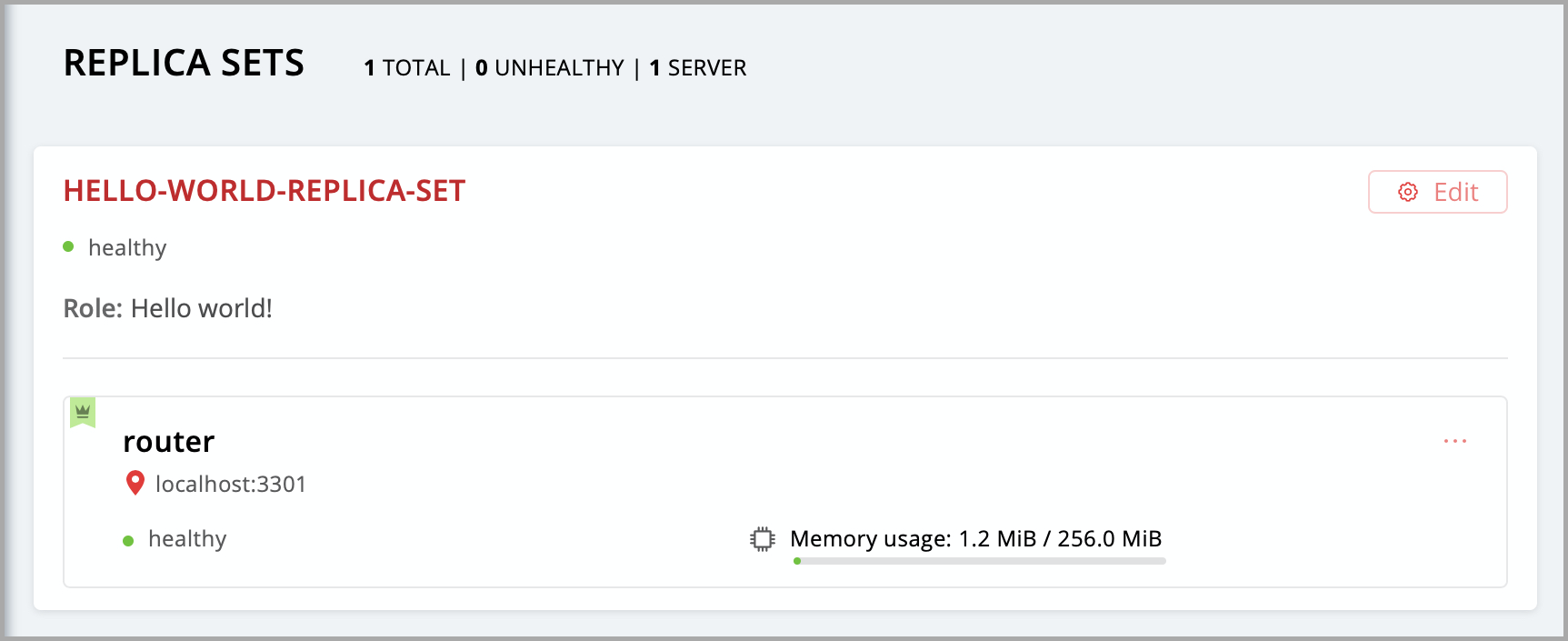

Click Create replica set and see the newly-created replica set in the web interface.

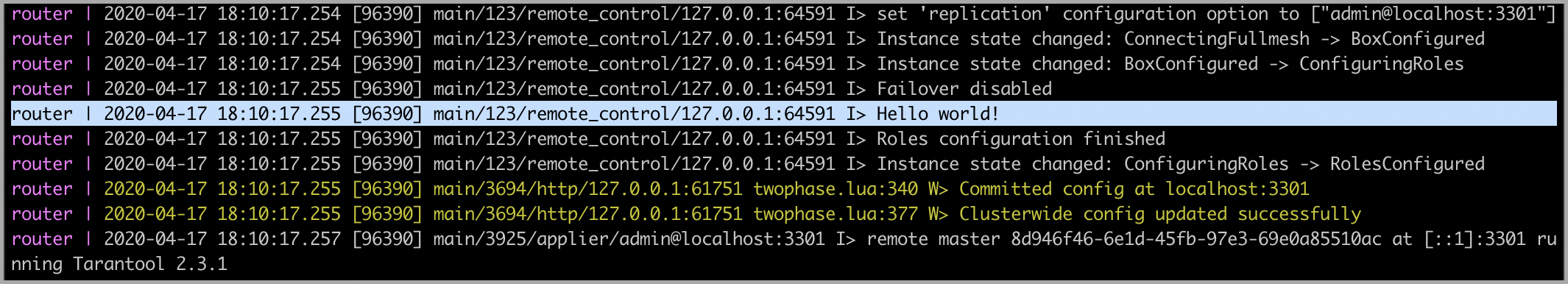

Your custom role got enabled. Find the “Hello world!” message in console, like this:

Finally, open the HTTP endpoint of this instance at http://localhost:8081/hello and see the reply to your GET request.

Everything is up and running! What’s next?

- Follow this guide to set up the rest of the cluster and try some cool cluster management features.

- Get inspired with these examples and implement more sophisticated business logic for your role.

- Pack your application for easy distribution. Choose what you like: a DEB or RPM package, a TGZ archive, or a Docker image.

User’s Guide¶

Preface¶

Welcome to Tarantool! This is the User’s Guide. We recommend reading it first, and consulting Reference materials for more detail afterwards, if needed.

How to read the documentation¶

To get started, you can install and launch Tarantool using a Docker container, a package manager, or the online Tarantool server at http://try.tarantool.org. Either way, as the first tryout, you can follow the introductory exercises from Chapter 2 “Getting started”. If you want more hands-on experience, proceed to Tutorials after you are through with Chapter 2.

Chapter 3 “Database” is about using Tarantool as a NoSQL DBMS, whereas Chapter 4 “Application server” is about using Tarantool as an application server.

Chapter 5 “Server administration” and Chapter 6 “Replication” are primarily for administrators.

Chapter 7 “Connectors” is strictly for users who are connecting from a different language such as C or Perl or Python — other users will find no immediate need for this chapter.

Chapter 8 “FAQ” gives answers to some frequently asked questions about Tarantool.

For experienced users, there are also Reference materials, a Contributor’s Guide and an extensive set of comments in the source code.

Getting in touch with the Tarantool community¶

Please report bugs or make feature requests at http://github.com/tarantool/tarantool/issues.

You can contact developers directly in telegram or in a Tarantool discussion group (English or Russian).

Conventions used in this manual¶

Square brackets [ and ] enclose optional syntax.

Two dots in a row .. mean the preceding tokens may be repeated.

A vertical bar | means the preceding and following tokens are mutually exclusive alternatives.

Database¶

In this chapter, we introduce the basic concepts of working with Tarantool as a database manager.

This chapter contains the following sections:

Data model¶

This section describes how Tarantool stores values and what operations with data it supports.

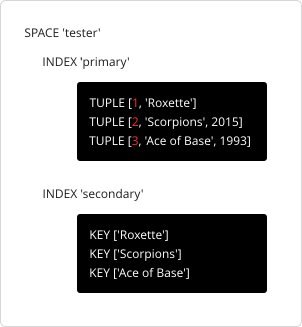

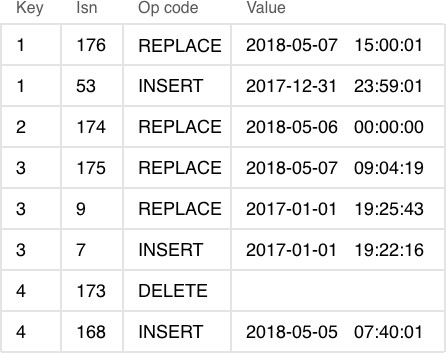

If you tried to create a database as suggested in our “Getting started” exercises, then your test database now looks like this:

Spaces¶

A space – ‘tester’ in our example – is a container.

When Tarantool is being used to store data, there is always at least one space. Each space has a unique name specified by the user. Besides, each space has a unique numeric identifier which can be specified by the user, but usually is assigned automatically by Tarantool. Finally, a space always has an engine: memtx (default) – in-memory engine, fast but limited in size, or vinyl – on-disk engine for huge data sets.

A space is a container for tuples. To be functional, it needs to have a primary index. It can also have secondary indexes.

Tuples¶

A tuple plays the same role as a “row” or a “record”, and the components of a tuple (which we call “fields”) play the same role as a “row column” or “record field”, except that:

- fields can be composite structures, such as arrays or maps, and

- fields don’t need to have names.

Any given tuple may have any number of fields, and the fields may be of

different types.

The identifier of a field is the field’s number, base 1

(in Lua and other 1-based languages) or base 0 (in PHP or C/C++).

For example, 1 or 0 can be used in some contexts to refer to the first

field of a tuple.

The number of tuples in a space is unlimited.

Tuples in Tarantool are stored as MsgPack arrays.

When Tarantool returns a tuple value in console, it uses the

YAML format,

for example: [3, 'Ace of Base', 1993].

Indexes¶

An index is a group of key values and pointers.

As with spaces, you should specify the index name, and let Tarantool come up with a unique numeric identifier (“index id”).

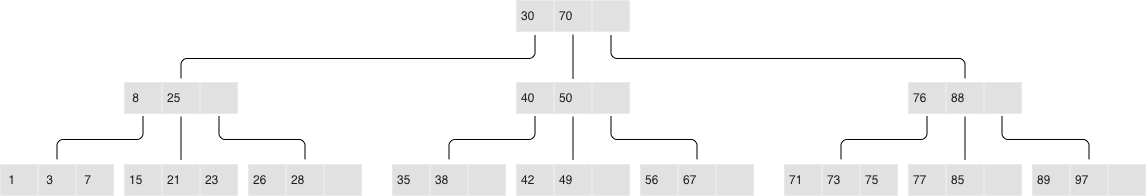

An index always has a type. The default index type is ‘TREE’. TREE indexes are provided by all Tarantool engines, can index unique and non-unique values, support partial key searches, comparisons and ordered results. Additionally, memtx engine supports HASH, RTREE and BITSET indexes.

An index may be multi-part, that is, you can declare that an index key value is composed of two or more fields in the tuple, in any order. For example, for an ordinary TREE index, the maximum number of parts is 255.

An index may be unique, that is, you can declare that it would be illegal to have the same key value twice.

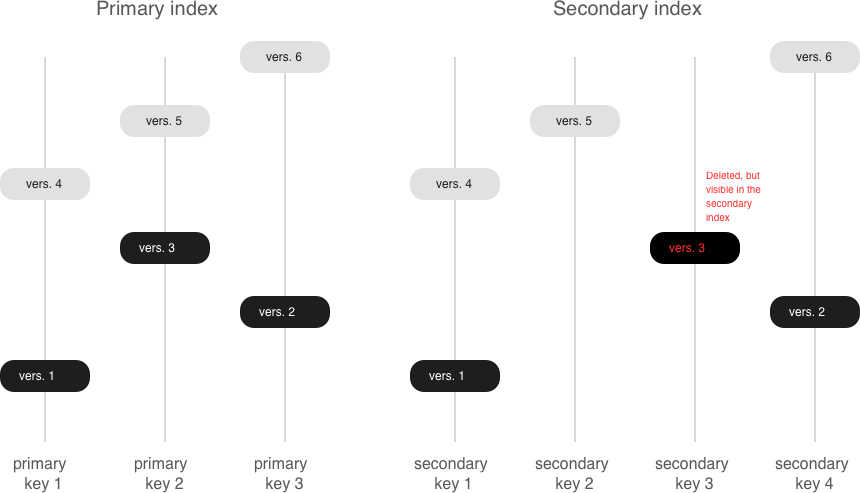

The first index defined on a space is called the primary key index, and it must be unique. All other indexes are called secondary indexes, and they may be non-unique.

An index definition may include identifiers of tuple fields and their expected types. See allowed indexed field types here.

Note

A recommended design pattern for a data model is to base primary keys on the first fields of a tuple, because this speeds up tuple comparison.

In our example, we first defined the primary index (named ‘primary’) based on field #1 of each tuple:

tarantool> i = s:create_index('primary', {type = 'hash', parts = {{field = 1, type = 'unsigned'}}}

The effect is that, for all tuples in space ‘tester’, field #1 must exist and must contain an unsigned integer. The index type is ‘hash’, so values in field #1 must be unique, because keys in HASH indexes are unique.

After that, we defined a secondary index (named ‘secondary’) based on field #2 of each tuple:

tarantool> i = s:create_index('secondary', {type = 'tree', parts = {2, 'string'}})

The effect is that, for all tuples in space ‘tester’, field #2 must exist and must contain a string. The index type is ‘tree’, so values in field #2 must not be unique, because keys in TREE indexes may be non-unique.

Note

Space definitions and index definitions are stored permanently in Tarantool’s system spaces _space and _index (for details, see reference on box.space submodule).

You can add, drop, or alter the definitions at runtime, with some restrictions. See syntax details in reference on box module.

Read more about index operations here.

Data types¶

Tarantool is both a database and an application server. Hence a developer often deals with two type sets: the programming language types (e.g. Lua) and the types of the Tarantool storage format (MsgPack).

Lua vs MsgPack¶

| Scalar / compound | MsgPack type | Lua type | Example value |

|---|---|---|---|

| scalar | nil | “nil” | msgpack.NULL |

| scalar | boolean | “boolean” | true |

| scalar | string | “string” | ‘A B C’ |

| scalar | integer | “number” | 12345 |

| scalar | double | “number” | 1.2345 |

| compound | map | “table” (with string keys) | {‘a’: 5, ‘b’: 6} |

| compound | array | “table” (with integer keys) | [1, 2, 3, 4, 5] |

| compound | array | tuple (“cdata”) | [12345, ‘A B C’] |

In Lua, a nil type has only one possible value, also called nil (displayed as null on Tarantool’s command line, since the output is in the YAML format). Nils may be compared to values of any types with == (is-equal) or ~= (is-not-equal), but other operations will not work. Nils may not be used in Lua tables; the workaround is to use msgpack.NULL

A boolean is either true or false.

A string is a variable-length sequence of bytes, usually represented with alphanumeric characters inside single quotes. In both Lua and MsgPack, strings are treated as binary data, with no attempts to determine a string’s character set or to perform any string conversion – unless there is an optional collation. So, usually, string sorting and comparison are done byte-by-byte, without any special collation rules applied. (Example: numbers are ordered by their point on the number line, so 2345 is greater than 500; meanwhile, strings are ordered by the encoding of the first byte, then the encoding of the second byte, and so on, so ‘2345’ is less than ‘500’.)

In Lua, a number is double-precision floating-point, but Tarantool allows both

integer and floating-point values. Tarantool will try to store a Lua number as

floating-point if the value contains a decimal point or is very large

(greater than 100 trillion = 1e14), otherwise Tarantool will store it as an integer.

To ensure that even very large numbers are stored as integers, use the

tonumber64 function, or the LL (Long Long) suffix,

or the ULL (Unsigned Long Long) suffix.

Here are examples of numbers using regular notation, exponential notation,

the ULL suffix and the tonumber64 function:

-55, -2.7e+20, 100000000000000ULL, tonumber64('18446744073709551615').

Lua tables with string keys are stored as MsgPack maps; Lua tables with integer keys starting with 1 – as MsgPack arrays. Nils may not be used in Lua tables; the workaround is to use msgpack.NULL

A tuple is a light reference to a MsgPack array stored in the database. It is a special type (cdata) to avoid conversion to a Lua table on retrieval. A few functions may return tables with multiple tuples. For more tuple examples, see box.tuple.

Note

Tarantool uses the MsgPack format for database storage, which is variable-length. So, for example, the smallest number requires only one byte, but the largest number requires nine bytes.

Examples of insert requests with different data types:

tarantool> box.space.K:insert{1,nil,true,'A B C',12345,1.2345}

---

- [1, null, true, 'A B C', 12345, 1.2345]

...

tarantool> box.space.K:insert{2,{['a']=5,['b']=6}}

---

- [2, {'a': 5, 'b': 6}]

...

tarantool> box.space.K:insert{3,{1,2,3,4,5}}

---

- [3, [1, 2, 3, 4, 5]]

...

Indexed field types¶

Indexes restrict values which Tarantool’s MsgPack may contain. This is why, for example, ‘unsigned’ is a separate indexed field type, compared to ‘integer’ data type in MsgPack: they both store ‘integer’ values, but an ‘unsigned’ index contains only non-negative integer values and an ‘integer’ index contains all integer values.

Here’s how Tarantool indexed field types correspond to MsgPack data types.

| Indexed field type | MsgPack data type (and possible values) |

Index type | Examples |

|---|---|---|---|

| unsigned (may also be called ‘uint’ or ‘num’, but ‘num’ is deprecated) | integer (integer between 0 and 18446744073709551615, i.e. about 18 quintillion) | TREE, BITSET or HASH | 123456 |

| integer (may also be called ‘int’) | integer (integer between -9223372036854775808 and 18446744073709551615) | TREE or HASH | -2^63 |

| number | integer (integer between -9223372036854775808 and 18446744073709551615) double (single-precision floating point number or double-precision floating point number) |

TREE or HASH | 1.234 -44 1.447e+44 |

| string (may also be called ‘str’) | string (any set of octets, up to the maximum length) | TREE, BITSET or HASH | ‘A B C’ ‘65 66 67’ |

| boolean | bool (true or false) | TREE or HASH | true |

| array | array (list of numbers representing points in a geometric figure) | RTREE | {10, 11} {3, 5, 9, 10} |

| scalar | bool (true or false) integer (integer between -9223372036854775808 and 18446744073709551615) double (single-precision floating point number or double-precision floating point number) string (any set of octets) Note: When there is a mix of types, the key order is: booleans, then numbers, then strings. |

TREE or HASH | true -1 1.234 ‘’ ‘ру’ |

Collations¶

By default, when Tarantool compares strings, it uses what we call a

“binary” collation. The only consideration here is the numeric value

of each byte in the string. Therefore, if the string is encoded

with ASCII or UTF-8, then 'A' < 'B' < 'a', because the encoding of ‘A’

(what used to be called the “ASCII value”) is 65, the encoding of

‘B’ is 66, and the encoding of ‘a’ is 98. Binary collation is best

if you prefer fast deterministic simple maintenance and searching

with Tarantool indexes.

But if you want the ordering that you see in phone books and dictionaries,

then you need Tarantool’s optional collations – unicode and

unicode_ci – that allow for 'a' < 'A' < 'B' and 'a' = 'A' < 'B'

respectively.

Optional collations use the ordering according to the Default Unicode Collation Element Table (DUCET) and the rules described in Unicode® Technical Standard #10 Unicode Collation Algorithm (UTS #10 UCA). The only difference between the two collations is about weights:

unicodecollation observes L1 and L2 and L3 weights (strength = ‘tertiary’),unicode_cicollation observes only L1 weights (strength = ‘primary’), so for example ‘a’ = ‘A’ = ‘á’ = ‘Á’.

As an example, let’s take some Russian words:

'ЕЛЕ'

'елейный'

'ёлка'

'еловый'

'елозить'

'Ёлочка'

'ёлочный'

'ЕЛь'

'ель'

…and show the difference in ordering and selecting by index:

with

unicodecollation:tarantool> box.space.T:create_index('I', {parts = {{1,'str', collation='unicode'}}}) ... tarantool> box.space.T.index.I:select() --- - - ['ЕЛЕ'] - ['елейный'] - ['ёлка'] - ['еловый'] - ['елозить'] - ['Ёлочка'] - ['ёлочный'] - ['ель'] - ['ЕЛь'] ... tarantool> box.space.T.index.I:select{'ЁлКа'} --- - [] ...

with

unicode_cicollation:tarantool> box.space.T:create_index('I', {parts = {{1,'str', collation='unicode_ci'}}}) ... tarantool> box.space.S.index.I:select() --- - - ['ЕЛЕ'] - ['елейный'] - ['ёлка'] - ['еловый'] - ['елозить'] - ['Ёлочка'] - ['ёлочный'] - ['ЕЛь'] ... tarantool> box.space.S.index.I:select{'ЁлКа'} --- - - ['ёлка'] ...

In fact, though, good collation involves much more than these simple examples of upper case / lower case and accented / unaccented equivalence in alphabets. We also consider variations of the same character, non-alphabetic writing systems, and special rules that apply for combinations of characters.

Sequences¶

A sequence is a generator of ordered integer values.

As with spaces and indexes, you should specify the sequence name, and let Tarantool come up with a unique numeric identifier (“sequence id”).

As well, you can specify several options when creating a new sequence. The options determine what value will be generated whenever the sequence is used.

Options for box.schema.sequence.create()¶

| Option name | Type and meaning | Default | Examples |

|---|---|---|---|

| start | Integer. The value to generate the first time a sequence is used | 1 | start=0 |

| min | Integer. Values smaller than this cannot be generated | 1 | min=-1000 |

| max | Integer. Values larger than this cannot be generated | 9223372036854775807 | max=0 |

| cycle | Boolean. Whether to start again when values cannot be generated | false | cycle=true |

| cache | Integer. The number of values to store in a cache | 0 | cache=0 |

| step | Integer. What to add to the previous generated value, when generating a new value | 1 | step=-1 |

| if_not_exists | Boolean. If this is true and a sequence with this name exists already, ignore other options and use the existing values | false | if_not_exists=true |

Once a sequence exists, it can be altered, dropped, reset, forced to generate the next value, or associated with an index.

For an initial example, we generate a sequence named ‘S’.

tarantool> box.schema.sequence.create('S',{min=5, start=5})

---

- step: 1

id: 5

min: 5

cache: 0

uid: 1

max: 9223372036854775807

cycle: false

name: S

start: 5

...

The result shows that the new sequence has all default values,

except for the two that were specified, min and start.

Then we get the next value, with the next() function.

tarantool> box.sequence.S:next()

---

- 5

...

The result is the same as the start value. If we called next()

again, we would get 6 (because the previous value plus the

step value is 6), and so on.

Then we create a new table, and say that its primary key may be generated from the sequence.

tarantool> s=box.schema.space.create('T');s:create_index('I',{sequence='S'})

---

...

Then we insert a tuple, without specifying a value for the primary key.

tarantool> box.space.T:insert{nil,'other stuff'}

---

- [6, 'other stuff']

...

The result is a new tuple where the first field has a value of 6. This arrangement, where the system automatically generates the values for a primary key, is sometimes called “auto-incrementing” or “identity”.

For syntax and implementation details, see the reference for box.schema.sequence.

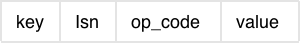

Persistence¶

In Tarantool, updates to the database are recorded in the so-called write ahead log (WAL) files. This ensures data persistence. When a power outage occurs or the Tarantool instance is killed incidentally, the in-memory database is lost. In this situation, WAL files are used to restore the data. Namely, Tarantool reads the WAL files and redoes the requests (this is called the “recovery process”). You can change the timing of the WAL writer, or turn it off, by setting wal_mode.

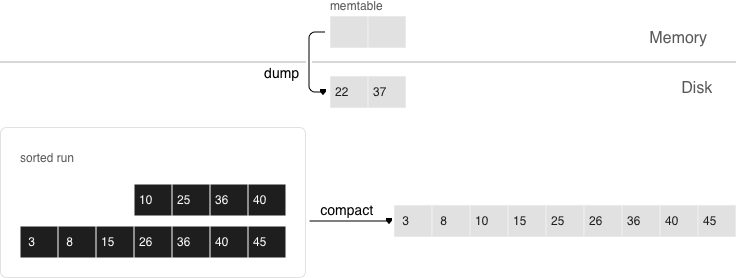

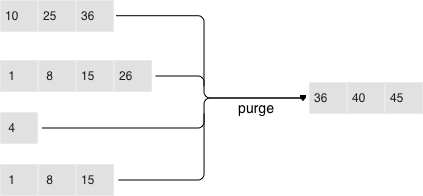

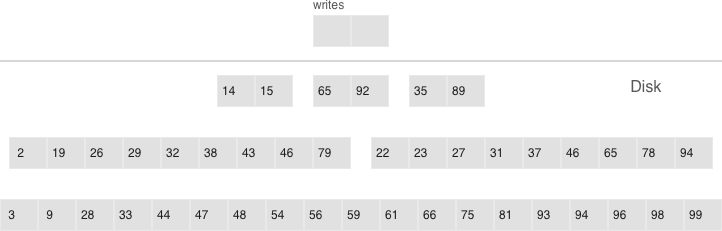

Tarantool also maintains a set of snapshot files. These files contain an on-disk copy of the entire data set for a given moment. Instead of reading every WAL file since the databases were created, the recovery process can load the latest snapshot file and then read only those WAL files that were produced after the snapshot file was made. After checkpointing, old WAL files can be removed to free up space.

To force immediate creation of a snapshot file, you can use Tarantool’s box.snapshot() request. To enable automatic creation of snapshot files, you can use Tarantool’s checkpoint daemon. The checkpoint daemon sets intervals for forced checkpoints. It makes sure that the states of both memtx and vinyl storage engines are synchronized and saved to disk, and automatically removes old WAL files.

Snapshot files can be created even if there is no WAL file.

Note

The memtx engine makes only regular checkpoints with the interval set in checkpoint daemon configuration.

The vinyl engine runs checkpointing in the background at all times.

See the Internals section for more details about the WAL writer and the recovery process.

Operations¶

Data operations¶

The basic data operations supported in Tarantool are:

- five data-manipulation operations (INSERT, UPDATE, UPSERT, DELETE, REPLACE), and

- one data-retrieval operation (SELECT).

All of them are implemented as functions in box.space submodule.

Examples:

INSERT: Add a new tuple to space ‘tester’.

The first field, field[1], will be 999 (MsgPack type is

integer).The second field, field[2], will be ‘Taranto’ (MsgPack type is

string).tarantool> box.space.tester:insert{999, 'Taranto'}

UPDATE: Update the tuple, changing field field[2].

The clause “{999}”, which has the value to look up in the index of the tuple’s primary-key field, is mandatory, because

update()requests must always have a clause that specifies a unique key, which in this case is field[1].The clause “{{‘=’, 2, ‘Tarantino’}}” specifies that assignment will happen to field[2] with the new value.

tarantool> box.space.tester:update({999}, {{'=', 2, 'Tarantino'}})

UPSERT: Upsert the tuple, changing field field[2] again.

The syntax of

upsert()is similar to the syntax ofupdate(). However, the execution logic of these two requests is different. UPSERT is either UPDATE or INSERT, depending on the database’s state. Also, UPSERT execution is postponed until after transaction commit, so, unlikeupdate(),upsert()doesn’t return data back.tarantool> box.space.tester:upsert({999, 'Taranted'}, {{'=', 2, 'Tarantism'}})

REPLACE: Replace the tuple, adding a new field.

This is also possible with the

update()request, but theupdate()request is usually more complicated.tarantool> box.space.tester:replace{999, 'Tarantella', 'Tarantula'}

SELECT: Retrieve the tuple.

The clause “{999}” is still mandatory, although it does not have to mention the primary key.

tarantool> box.space.tester:select{999}

DELETE: Delete the tuple.

In this example, we identify the primary-key field.

tarantool> box.space.tester:delete{999}

Summarizing the examples:

- Functions

insertandreplaceaccept a tuple (where a primary key comes as part of the tuple). - Function

upsertaccepts a tuple (where a primary key comes as part of the tuple), and also the update operations to execute. - Function

deleteaccepts a full key of any unique index (primary or secondary). - Function

updateaccepts a full key of any unique index (primary or secondary), and also the operations to execute. - Function

selectaccepts any key: primary/secondary, unique/non-unique, full/partial.

See reference on box.space for more

details on using data operations.

Note

Besides Lua, you can use Perl, PHP, Python or other programming language connectors. The client server protocol is open and documented. See this annotated BNF.

Index operations¶

Index operations are automatic: if a data-manipulation request changes a tuple, then it also changes the index keys defined for the tuple.

The simple index-creation operation that we’ve illustrated before is:

box.space.space-name:create_index('index-name')

This creates a unique TREE index on the first field of all tuples (often called “Field#1”), which is assumed to be numeric.

The simple SELECT request that we’ve illustrated before is:

box.space.space-name:select(value)

This looks for a single tuple via the first index. Since the first index

is always unique, the maximum number of returned tuples will be: one.

You can call select() without arguments, causing all tuples to be returned.

Let’s continue working with the space ‘tester’ created in the “Getting started” exercises but first modify it:

tarantool> box.space.tester:format({

> {name = 'id', type = 'unsigned'},

> {name = 'band_name', type = 'string'},

> {name = 'year', type = 'unsigned'},

> {name = 'rate', type = 'unsigned', is_nullable=true}})

---

...

Add the rate to the tuple #1 and #2:

tarantool> box.space.tester:update(1, {{'=', 4, 5}})

---

- [1, 'Roxette', 1986, 5]

...

tarantool> box.space.tester:update(2, {{'=', 4, 4}})

---

- [2, 'Scorpions', 2015, 4]

...

And insert another tuple:

tarantool> box.space.tester:insert({4, 'Roxette', 2016, 3})

---

- [4, 'Roxette', 2016, 3]

...

The existing SELECT variations:

- The search can use comparisons other than equality.

tarantool> box.space.tester:select(1, {iterator = 'GT'})

---

- - [2, 'Scorpions', 2015, 4]

- [3, 'Ace of Base', 1993]

- [4, 'Roxette', 2016, 3]

...

The comparison operators are LT, LE, EQ, REQ, GE, GT (for “less than”, “less than or equal”, “equal”, “reversed equal”, “greater than or equal”, “greater than” respectively). Comparisons make sense if and only if the index type is ‘TREE’.

This type of search may return more than one tuple; if so, the tuples will be in descending order by key when the comparison operator is LT or LE or REQ, otherwise in ascending order.

- The search can use a secondary index.

For a primary-key search, it is optional to specify an index name. For a secondary-key search, it is mandatory.

tarantool> box.space.tester:create_index('secondary', {parts = {{field=3, type='unsigned'}}})

---

- unique: true

parts:

- type: unsigned

is_nullable: false

fieldno: 3

id: 2

space_id: 512

type: TREE

name: secondary

...

tarantool> box.space.tester.index.secondary:select({1993})

---

- - [3, 'Ace of Base', 1993]

...

- The search may be for some key parts starting with the prefix of the key. Notice that partial key searches are available only in TREE indexes.

-- Create an index with three parts

tarantool> box.space.tester:create_index('tertiary', {parts = {{field = 2, type = 'string'}, {field=3, type='unsigned'}, {field=4, type='unsigned'}}})

---

- unique: true

parts:

- type: string

is_nullable: false

fieldno: 2

- type: unsigned

is_nullable: false

fieldno: 3

- type: unsigned

is_nullable: true

fieldno: 4

id: 6

space_id: 513

type: TREE

name: tertiary

...

-- Make a partial search

tarantool> box.space.tester.index.tertiary:select({'Scorpions', 2015})

---

- - [2, 'Scorpions', 2015, 4]

...

- The search may be for all fields, using a table for the value:

tarantool> box.space.tester.index.tertiary:select({'Roxette', 2016, 3})

---

- - [4, 'Roxette', 2016, 3]

...

or the search can be for one field, using a table or a scalar:

tarantool> box.space.tester.index.tertiary:select({'Roxette'})

---

- - [1, 'Roxette', 1986, 5]

- [4, 'Roxette', 2016, 3]

...

Working with BITSET and RTREE¶

BITSET example:

tarantool> box.schema.space.create('bitset_example')

tarantool> box.space.bitset_example:create_index('primary')

tarantool> box.space.bitset_example:create_index('bitset',{unique=false,type='BITSET', parts={2,'unsigned'}})

tarantool> box.space.bitset_example:insert{1,1}

tarantool> box.space.bitset_example:insert{2,4}

tarantool> box.space.bitset_example:insert{3,7}

tarantool> box.space.bitset_example:insert{4,3}

tarantool> box.space.bitset_example.index.bitset:select(2, {iterator='BITS_ANY_SET'})

The result will be:

---

- - [3, 7]

- [4, 3]

...

because (7 AND 2) is not equal to 0, and (3 AND 2) is not equal to 0.

RTREE example:

tarantool> box.schema.space.create('rtree_example')

tarantool> box.space.rtree_example:create_index('primary')

tarantool> box.space.rtree_example:create_index('rtree',{unique=false,type='RTREE', parts={2,'ARRAY'}})

tarantool> box.space.rtree_example:insert{1, {3, 5, 9, 10}}

tarantool> box.space.rtree_example:insert{2, {10, 11}}

tarantool> box.space.rtree_example.index.rtree:select({4, 7, 5, 9}, {iterator = 'GT'})

The result will be:

---

- - [1, [3, 5, 9, 10]]

...

because a rectangle whose corners are at coordinates 4,7,5,9 is entirely

within a rectangle whose corners are at coordinates 3,5,9,10.

Additionally, there exist index iterator operations. They can only be used with code in Lua and C/C++. Index iterators are for traversing indexes one key at a time, taking advantage of features that are specific to an index type, for example evaluating Boolean expressions when traversing BITSET indexes, or going in descending order when traversing TREE indexes.

See also other index operations like alter() (modify index) and drop() (delete index) in reference for Submodule box.index.

Complexity factors¶

In reference for box.space and Submodule box.index submodules, there are notes about which complexity factors might affect the resource usage of each function.

| Complexity factor | Effect |

|---|---|

| Index size | The number of index keys is the same as the number of tuples in the data set. For a TREE index, if there are more keys, then the lookup time will be greater, although of course the effect is not linear. For a HASH index, if there are more keys, then there is more RAM used, but the number of low-level steps tends to remain constant. |

| Index type | Typically, a HASH index is faster than a TREE index if the number of tuples in the space is greater than one. |

| Number of indexes accessed | Ordinarily, only one index is accessed to retrieve one tuple. But to update the tuple, there must be N accesses if the space has N different indexes. Note re storage engine: Vinyl optimizes away such accesses if secondary index fields are unchanged by the update. So, this complexity factor applies only to memtx, since it always makes a full-tuple copy on every update. |

| Number of tuples accessed | A few requests, for example SELECT, can retrieve multiple tuples. This factor is usually less important than the others. |

| WAL settings | The important setting for the write-ahead log is wal_mode. If the setting causes no writing or delayed writing, this factor is unimportant. If the setting causes every data-change request to wait for writing to finish on a slow device, this factor is more important than all the others. |

Transactions¶

Transactions in Tarantool occur in fibers on a single thread. That is why Tarantool has a guarantee of execution atomicity. That requires emphasis.

Threads, fibers and yields¶

How does Tarantool process a basic operation? As an example, let’s take this query:

tarantool> box.space.tester:update({3}, {{'=', 2, 'size'}, {'=', 3, 0}})

This is equivalent to the following SQL statement for a table that stores

primary keys in field[1]:

UPDATE tester SET "field[2]" = 'size', "field[3]" = 0 WHERE "field[1]" = 3

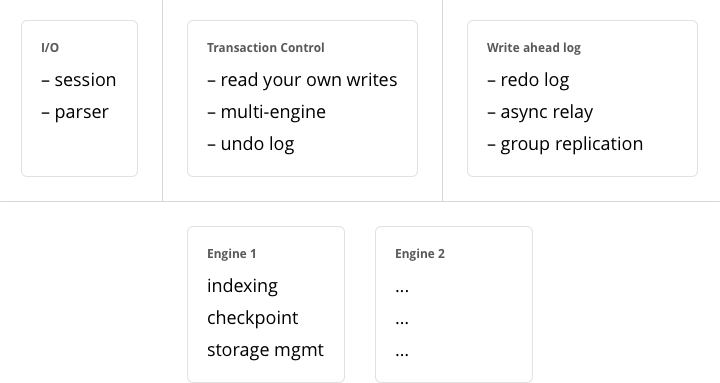

Assuming this query is received by Tarantool via network, it will be processed with three operating system threads:

The network thread on the server side receives the query, parses the statement, checks if it’s correct, and then transforms it into a special structure–a message containing an executable statement and its options.

The network thread ships this message to the instance’s transaction processor thread using a lock-free message bus. Lua programs execute directly in the transaction processor thread, and do not require parsing and preparation.

The instance’s transaction processor thread uses the primary-key index on field[1] to find the location of the tuple. It determines that the tuple can be updated (not much can go wrong when you’re merely changing an unindexed field value).

The transaction processor thread sends a message to the write-ahead logging (WAL) thread to commit the transaction. When done, the WAL thread replies with a COMMIT or ROLLBACK result to the transaction processor which gives it back to the network thread, and the network thread returns the result to the client.

Notice that there is only one transaction processor thread in Tarantool. Some people are used to the idea that there can be multiple threads operating on the database, with (say) thread #1 reading row #x, while thread #2 writes row #y. With Tarantool, no such thing ever happens. Only the transaction processor thread can access the database, and there is only one transaction processor thread for each Tarantool instance.

Like any other Tarantool thread, the transaction processor thread can handle many fibers. A fiber is a set of computer instructions that may contain “yield” signals. The transaction processor thread will execute all computer instructions until a yield, then switch to execute the instructions of a different fiber. Thus (say) the thread reads row #x for the sake of fiber #1, then writes row #y for the sake of fiber #2.

Yields must happen, otherwise the transaction processor thread would stick permanently on the same fiber. There are two types of yields:

- implicit yields: every data-change operation or network-access causes an implicit yield, and every statement that goes through the Tarantool client causes an implicit yield.

- explicit yields: in a Lua function, you can (and should) add “yield” statements to prevent hogging. This is called cooperative multitasking.

Cooperative multitasking¶

Cooperative multitasking means: unless a running fiber deliberately yields control, it is not preempted by some other fiber. But a running fiber will deliberately yield when it encounters a “yield point”: a transaction commit, an operating system call, or an explicit “yield” request. Any system call which can block will be performed asynchronously, and any running fiber which must wait for a system call will be preempted, so that another ready-to-run fiber takes its place and becomes the new running fiber.

This model makes all programmatic locks unnecessary: cooperative multitasking ensures that there will be no concurrency around a resource, no race conditions, and no memory consistency issues. The way to achieve this is quite simple: in critical sections, don’t use yields, explicit or implicit, and no one can interfere into the code execution.

When requests are small, for example simple UPDATE or INSERT or DELETE or SELECT, fiber scheduling is fair: it takes only a little time to process the request, schedule a disk write, and yield to a fiber serving the next client.

However, a function might perform complex computations or might be written in such a way that yields do not occur for a long time. This can lead to unfair scheduling, when a single client throttles the rest of the system, or to apparent stalls in request processing. Avoiding this situation is the responsibility of the function’s author.

Transactions¶

In the absence of transactions, any function that contains yield points may see changes in the database state caused by fibers that preempt. Multi-statement transactions exist to provide isolation: each transaction sees a consistent database state and commits all its changes atomically. At commit time, a yield happens and all transaction changes are written to the write ahead log in a single batch. Or, if needed, transaction changes can be rolled back – completely or to a specific savepoint.

In Tarantool, transaction isolation level is serializable with the clause “if no failure during writing to WAL”. In case of such a failure that can happen, for example, if the disk space is over, the transaction isolation level becomes read uncommitted.

In vynil, to implement isolation Tarantool uses a simple optimistic scheduler: the first transaction to commit wins. If a concurrent active transaction has read a value modified by a committed transaction, it is aborted.

The cooperative scheduler ensures that, in absence of yields, a multi-statement transaction is not preempted and hence is never aborted. Therefore, understanding yields is essential to writing abort-free code.

Sometimes while testing the transaction mechanism in Tarantool you can notice

that yielding after box.begin() but before any read/write operation does not

cause an abort as it should according to the description. This happens because

actually box.begin() does not start a transaction. It is a mark telling

Tarantool to start a transaction after some database request that follows.

In memtx, if an instruction that implies yields, explicit or implicit, is executed during a transaction, the transaction is fully rolled back. In vynil, we use more complex transactional manager that allows yields.

Note

You can’t mix storage engines in a transaction today.

Implicit yields¶

The only explicit yield requests in Tarantool are fiber.sleep() and fiber.yield(), but many other requests “imply” yields because Tarantool is designed to avoid blocking.

Database requests imply yields if and only if there is disk I/O. For memtx, since all data is in memory, there is no disk I/O during a read request. For vinyl, since some data may not be in memory, there may be disk I/O for a read (to fetch data from disk) or for a write (because a stall may occur while waiting for memory to be free). For both memtx and vinyl, since data-change requests must be recorded in the WAL, there is normally a commit. A commit happens automatically after every request in default “autocommit” mode, or a commit happens at the end of a transaction in “transaction” mode, when a user deliberately commits by calling box.commit(). Therefore for both memtx and vinyl, because there can be disk I/O, some database operations may imply yields.

Many functions in modules fio, net_box, console and socket (the “os” and “network” requests) yield.

That is why executing separate commands such as select(), insert(),

update() in the console inside a transaction will cause an abort. This is

due to implicit yield happening after each chunk of code is executed in the console.

Example #1

- Engine = memtx

The sequenceselect() insert()has one yield, at the end of insertion, caused by implicit commit;select()has nothing to write to the WAL and so does not yield. - Engine = vinyl

The sequenceselect() insert()has one to three yields, sinceselect()may yield if the data is not in cache,insert()may yield waiting for available memory, and there is an implicit yield at commit. - The sequence

begin() insert() insert() commit()yields only at commit if the engine is memtx, and can yield up to 3 times if the engine is vinyl.

Example #2

Assume that in the memtx space ‘tester’ there are tuples in which the third field represents a positive dollar amount. Let’s start a transaction, withdraw from tuple#1, deposit in tuple#2, and end the transaction, making its effects permanent.

tarantool> function txn_example(from, to, amount_of_money)

> box.begin()

> box.space.tester:update(from, {{'-', 3, amount_of_money}})

> box.space.tester:update(to, {{'+', 3, amount_of_money}})

> box.commit()

> return "ok"

> end

---

...

tarantool> txn_example({999}, {1000}, 1.00)

---

- "ok"

...

If wal_mode = ‘none’, then implicit yielding at commit time does not take place, because there are no writes to the WAL.

If a task is interactive – sending requests to the server and receiving responses – then it involves network I/O, and therefore there is an implicit yield, even if the request that is sent to the server is not itself an implicit yield request. Therefore, the following sequence

conn.space.test:select{1}

conn.space.test:select{2}

conn.space.test:select{3}

causes yields three times sequentially when sending requests to the network and awaiting the results. On the server side, the same requests are executed in common order possibly mixing with other requests from the network and local fibers. Something similar happens when using clients that operate via telnet, via one of the connectors, or via the MySQL and PostgreSQL rocks, or via the interactive mode when using Tarantool as a client.

After a fiber has yielded and then has regained control, it immediately issues testcancel.

Access control¶

Understanding security details is primarily an issue for administrators. However, ordinary users should at least skim this section to get an idea of how Tarantool makes it possible for administrators to prevent unauthorized access to the database and to certain functions.

Briefly:

- There is a method to guarantee with password checks that users really are who they say they are (“authentication”).

- There is a _user system space, where usernames and password-hashes are stored.

- There are functions for saying that certain users are allowed to do certain things (“privileges”).

- There is a _priv system space, where privileges are stored. Whenever a user tries to do an operation, there is a check whether the user has the privilege to do the operation (“access control”).

Details follow.

Users¶

There is a current user for any program working with Tarantool, local or remote. If a remote connection is using a binary port, the current user, by default, is ‘guest’. If the connection is using an admin-console port, the current user is ‘admin’. When executing a Lua initialization script, the current user is also ‘admin’.

The current user name can be found with box.session.user().

The current user can be changed:

- For a binary port connection – with the AUTH protocol command, supported by most clients;

- For an admin-console connection and in a Lua initialization script – with box.session.su();

- For a binary-port connection invoking a stored function with the CALL command – if the SETUID property is enabled for the function, Tarantool temporarily replaces the current user with the function’s creator, with all the creator’s privileges, during function execution.

Passwords¶

Each user (except ‘guest’) may have a password. The password is any alphanumeric string.

Tarantool passwords are stored in the _user system space with a cryptographic hash function so that, if the password is ‘x’, the stored hash-password is a long string like ‘lL3OvhkIPOKh+Vn9Avlkx69M/Ck=‘. When a client connects to a Tarantool instance, the instance sends a random salt value which the client must mix with the hashed-password before sending to the instance. Thus the original value ‘x’ is never stored anywhere except in the user’s head, and the hashed value is never passed down a network wire except when mixed with a random salt.

Note

For more details of the password hashing algorithm (e.g. for the purpose of writing a new client application), read the scramble.h header file.

This system prevents malicious onlookers from finding passwords by snooping in the log files or snooping on the wire. It is the same system that MySQL introduced several years ago, which has proved adequate for medium-security installations. Nevertheless, administrators should warn users that no system is foolproof against determined long-term attacks, so passwords should be guarded and changed occasionally. Administrators should also advise users to choose long unobvious passwords, but it is ultimately up to the users to choose or change their own passwords.

There are two functions for managing passwords in Tarantool: box.schema.user.passwd() for changing a user’s password and box.schema.user.password() for getting a hash of a user’s password.

Owners and privileges¶

Tarantool has one database. It may be called “box.schema” or “universe”. The database contains database objects, including spaces, indexes, users, roles, sequences, and functions.

The owner of a database object is the user who created it. The owner of the database itself, and the owner of objects that are created initially (the system spaces and the default users) is ‘admin’.

Owners automatically have privileges for what they create. They can share these privileges with other users or with roles, using box.schema.user.grant() requests. The following privileges can be granted:

- ‘read’, e.g. allow select from a space

- ‘write’, e.g. allow update on a space

- ‘execute’, e.g. allow call of a function, or (less commonly) allow use of a role

- ‘create’, e.g. allow box.schema.space.create (access to certain system spaces is also necessary)

- ‘alter’, e.g. allow box.space.x.index.y:alter (access to certain system spaces is also necessary)

- ‘drop’, e.g. allow box.sequence.x:drop (currently this can be granted but has no effect)

- ‘usage’, e.g. whether any action is allowable regardless of other privileges (sometimes revoking ‘usage’ is a convenient way to block a user temporarily without dropping the user)

- ‘session’, e.g. whether the user can ‘connect’.

To create objects, users need the ‘create’ privilege and at least ‘read’ and ‘write’ privileges on the system space with a similar name (for example, on the _space if the user needs to create spaces).

To access objects, users need an appropriate privilege on the object (for example, the ‘execute’ privilege on function F if the users need to execute function F). See below some examples for granting specific privileges that a grantor – that is, ‘admin’ or the object creator – can make.

To drop an object, users must be the object’s creator or be ‘admin’. As the owner of the entire database, ‘admin’ can drop any object including other users.

To grant privileges to a user, the object owner says box.schema.user.grant(). To revoke privileges from a user, the object owner says box.schema.user.revoke(). In either case, there are up to five parameters:

(user-name, privilege, object-type [, object-name [, options]])

user-nameis the user (or role) that will receive or lose the privilege;privilegeis any of ‘read’, ‘write’, ‘execute’, ‘create’, ‘alter’, ‘drop’, ‘usage’, or ‘session’ (or a comma-separated list);object-typeis any of ‘space’, ‘index’, ‘sequence’, ‘function’, role-name, or ‘universe’;object-nameis what the privilege is for (omitted ifobject-typeis ‘universe’);optionsis a list inside braces for example{if_not_exists=true|false}(usually omitted because the default is acceptable).Every update of user privileges is reflected immediately in the existing sessions and objects, e.g. functions.

Example for granting many privileges at once

In this example user ‘admin’ grants many privileges on many objects to user ‘U’, with a single request.

box.schema.user.grant('U','read,write,execute,create,drop','universe')

Examples for granting privileges for specific operations

In these examples the object’s creator grants precisely the minimal privileges necessary for particular operations, to user ‘U’.

-- So that 'U' can create spaces:

box.schema.user.grant('U','create','universe')

box.schema.user.grant('U','write', 'space', '_schema')

box.schema.user.grant('U','write', 'space', '_space')

-- So that 'U' can create indexes (assuming 'U' created the space)

box.schema.user.grant('U','read', 'space', '_space')

box.schema.user.grant('U','read,write', 'space', '_index')

-- So that 'U' can create indexes on space T (assuming 'U' did not create space T)

box.schema.user.grant('U','create','space','T')

box.schema.user.grant('U','read', 'space', '_space')

box.schema.user.grant('U','write', 'space', '_index')

-- So that 'U' can alter indexes on space T (assuming 'U' did not create the index)

box.schema.user.grant('U','alter','space','T')

box.schema.user.grant('U','read','space','_space')

box.schema.user.grant('U','read','space','_index')

box.schema.user.grant('U','read','space','_space_sequence')

box.schema.user.grant('U','write','space','_index')

-- So that 'U' can create users or roles:

box.schema.user.grant('U','create','universe')

box.schema.user.grant('U','read,write', 'space', '_user')

box.schema.user.grant('U','write','space', '_priv')

-- So that 'U' can create sequences:

box.schema.user.grant('U','create','universe')